How AI's Demand for Energy Transforms IT Procurement Strategies

Most people like

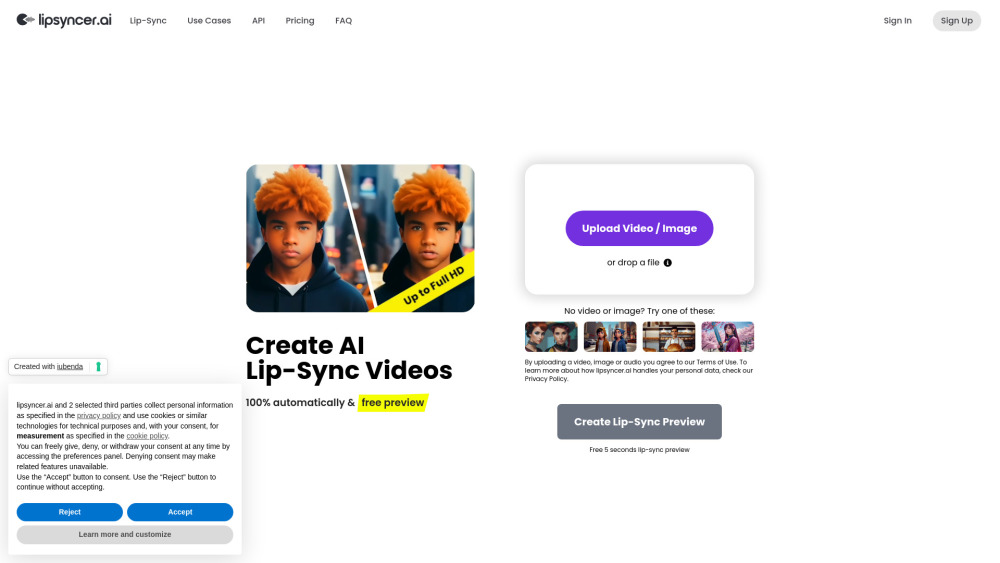

Introducing a cutting-edge platform designed for creating captivating AI-generated lip sync videos. Streamline your content creation process and engage your audience like never before with our intuitive tools and technology. Whether you're a content creator, marketer, or simply looking to have fun, our platform empowers you to produce high-quality lip sync videos effortlessly. Dive in and unleash your creativity today!

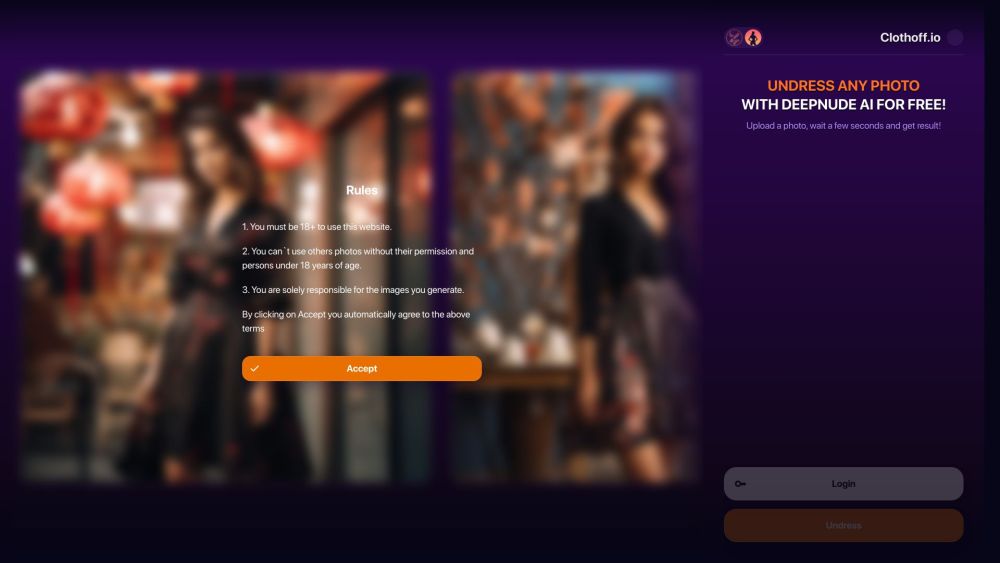

Discover the transformative power of AI technology with our innovative AI clothes remover tool. Designed to enhance your visual content creation, this cutting-edge software effortlessly removes clothing items from images while maintaining the integrity of the background. Whether you're a photographer, fashion designer, or simply looking to enhance your personal projects, our AI-driven solution streamlines the process and improves your productivity. Experience the future of image editing today with our user-friendly AI clothes remover, perfect for all your creative needs.

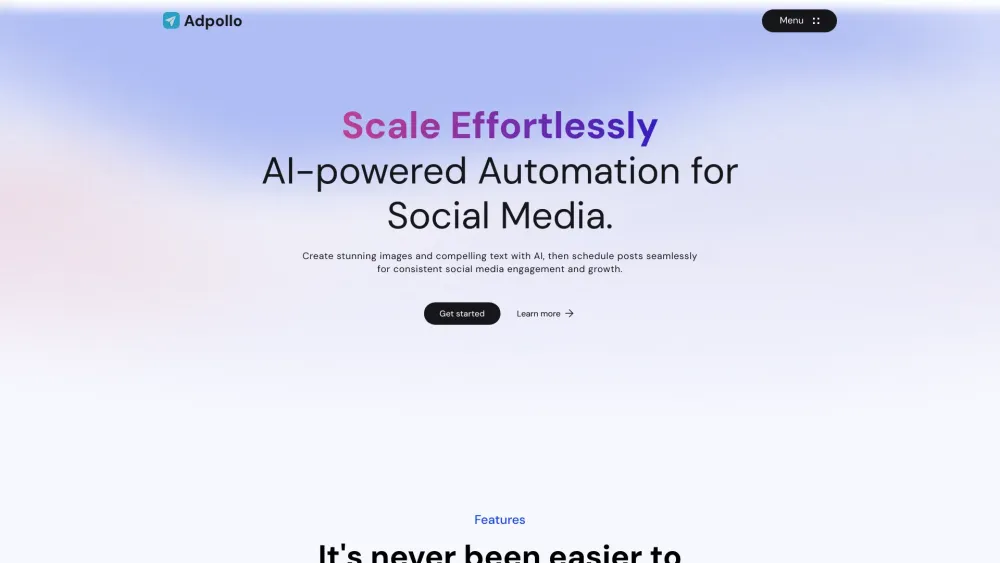

Transform your social media presence with our AI-powered platform designed for seamless content generation and scheduling. Effortlessly create engaging posts that resonate with your audience, and optimize your social media strategy for maximum impact. Whether you're a small business, influencer, or marketer, our tool simplifies the process to help you stay on top of your social media game.

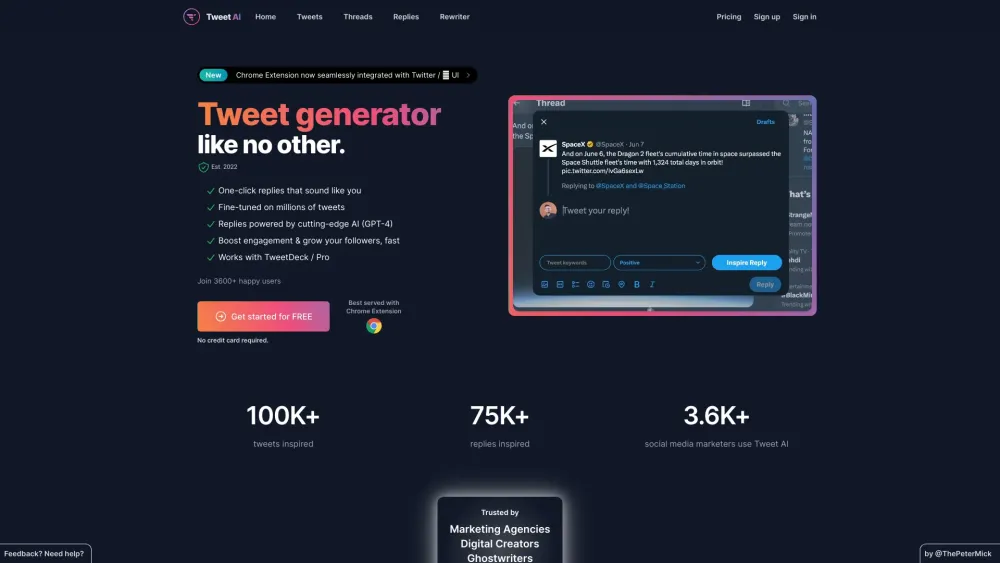

Increase Sales and Engagement on X

In today's competitive market, enhancing sales and boosting audience engagement on X is more crucial than ever. This platform offers unique opportunities to connect with your target audience, drive conversions, and build lasting relationships. By implementing effective strategies tailored to maximize your presence on X, you can elevate your brand's visibility and achieve remarkable results. Ready to transform your approach? Let’s explore how to optimize your sales and engagement on X!

Find AI tools in YBX