How Facebook Leverages AI to Enhance Content Moderation Efficiency

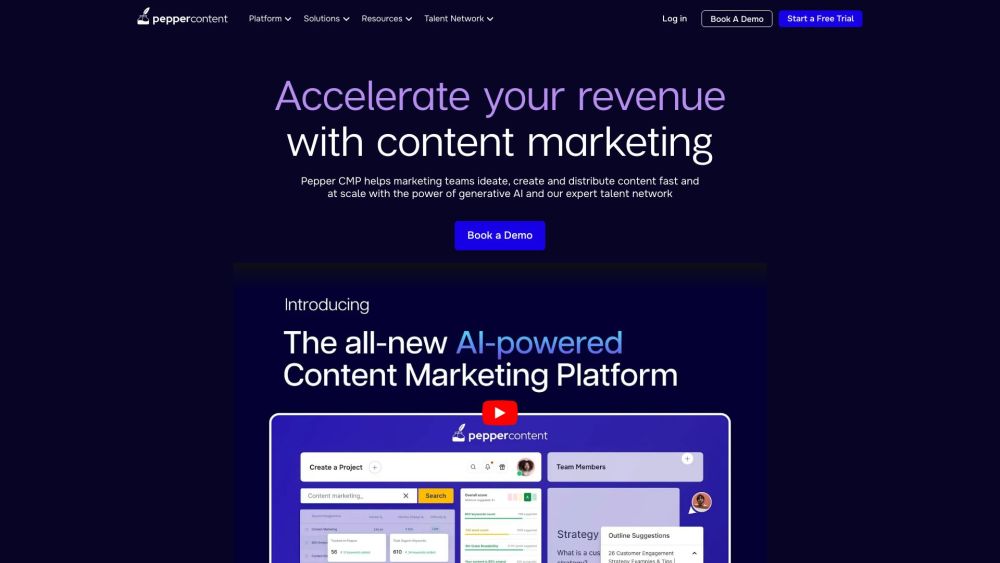

Introducing an AI-Powered Content Marketing Platform

Unlock the potential of your marketing strategy with our cutting-edge AI-driven content marketing platform. Designed to streamline your content creation and distribution, our platform harnesses the power of artificial intelligence to deliver engaging and relevant content tailored to your audience. Transform how you connect with customers, boost your brand visibility, and drive conversions effortlessly. Discover the future of content marketing today!

Babble AI harnesses the power of ChatGPT to develop intelligent chatbots, facilitating seamless and natural interactions that enhance customer engagement.

Discover the transformative power of AI-driven clips that bring podcast content to life. These innovative audio snippets enhance the listening experience by highlighting key moments and insightful discussions, making it easier than ever for audiences to engage with their favorite podcasts. Whether you’re seeking quick highlights or in-depth knowledge, AI-powered podcast clips are your gateway to the best in audio storytelling.

Enhance Efficiency in Grading with AI Solutions

In today’s fast-paced educational landscape, leveraging AI technology can significantly streamline the grading process. By automating tedious tasks and ensuring consistent evaluation, AI tools not only save educators valuable time but also improve the accuracy of assessments. Discover how integrating AI into your grading system can transform the educational experience for both teachers and students alike.
