Seoul Summit: Global Leaders and Corporations Unite for AI Safety Commitment

Most people like

Sivi is an advanced AI tool that swiftly transforms text into striking graphic designs, making it easier than ever to bring your creative visions to life.

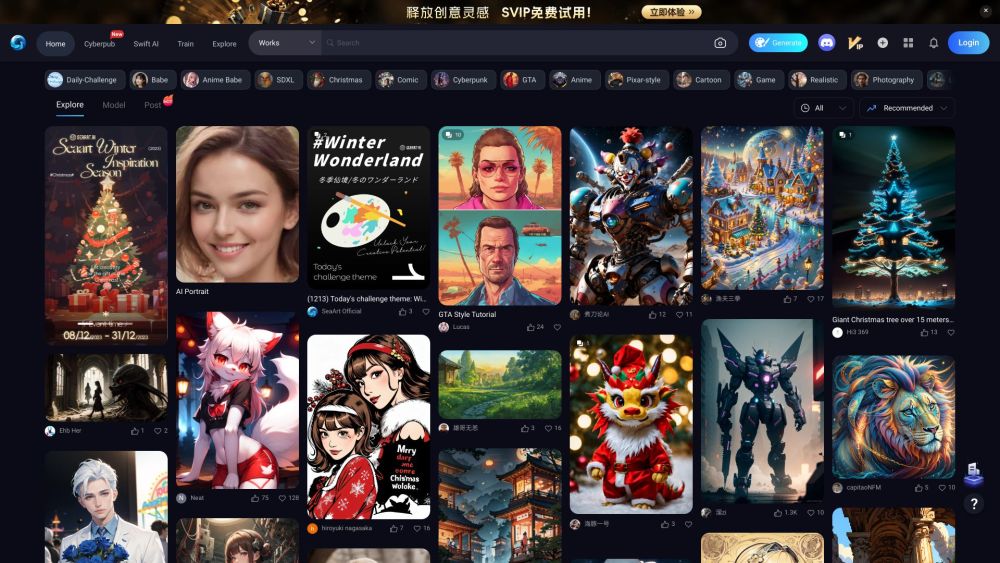

Unlock the Power of AI-Generated Illustrations

Discover the exciting world of AI illustration generation with our innovative platform. Here, creativity meets cutting-edge technology, allowing users to create stunning illustrations effortlessly. Whether you're a professional artist, a designer, or just someone looking to explore your artistic side, our AI-driven tools provide you with endless possibilities to bring your ideas to life. Join us today and transform the way you create visuals!

Introducing an AI-Powered Platform for Enhanced Video Accessibility

In today’s digital world, ensuring video accessibility is crucial for engaging diverse audiences. Our innovative AI-driven platform transforms video content by automatically generating captions, transcripts, and translations, making it accessible to everyone, including individuals with hearing impairments and non-native language speakers. Join us in making video content universally accessible, unlocking the full potential of your media for all viewers.

Unlocking Intelligence for All: Bridging Knowledge and Access

In a world where knowledge is power, our mission is to democratize intelligence. We believe that everyone should have the opportunity to access valuable information and insights, regardless of their background. By breaking down barriers and enhancing understanding, we aim to empower individuals and communities. Join us in this journey to make intelligence accessible for all!
