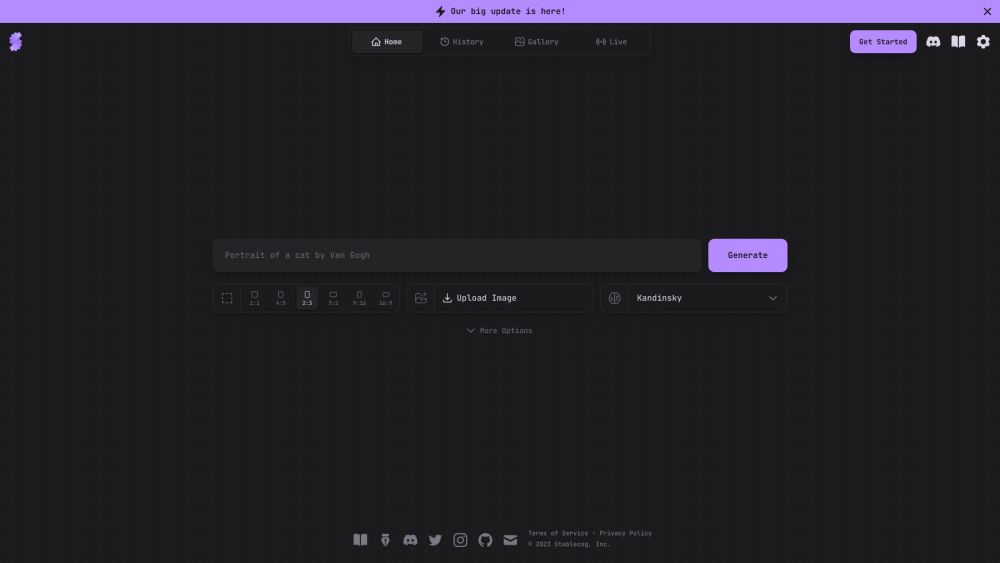

Generating images from simple text prompts with AI has never been faster, thanks to advances from Stability AI, the creator of the widely used Stable Diffusion model.

With the announcement of the SDXL Turbo model this week, users can now generate images in real time, without waiting for the AI to process each prompt. What previously took 50 generation steps now requires only one, drastically reducing computation time. SDXL Turbo can produce a 512×512 image in just 207 ms on an A100 GPU, a significant improvement over earlier AI diffusion models.

The SDXL Turbo experience mirrors the predictive typing features found in modern search engines, applying the same as-you-type responsiveness to image generation in real time.

Remarkably, this acceleration does not stem from advanced hardware; rather, it is driven by a novel technique called Adversarial Diffusion Distillation (ADD). Emad Mostaque, founder and CEO of Stability AI, explained on X (formerly Twitter), “One step Stable Diffusion XL with our new Adversarial Distilled Diffusion (ADD) approach offers less diversity but much faster results, with more variants expected in the future.”

SDXL – Now Faster

The SDXL base model was introduced in July, and Mostaque anticipated it would serve as a strong foundation for future models. Stable Diffusion competes with other text-to-image models, such as OpenAI’s DALL-E and Midjourney.

A key feature of the SDXL base model is support for ControlNets, which give users more control over image composition. With 3.5 billion parameters, the model also offers improved accuracy because it comprehends a wider range of concepts. SDXL Turbo builds upon these innovations and adds faster generation.

Stability AI is following a growing trend in generative AI development: first producing an accurate model, then optimizing it for performance, similar to OpenAI’s approach with GPT-3.5 Turbo and GPT-4 Turbo.

As generative AI models are accelerated, a common concern is the tradeoff between quality and speed. However, SDXL Turbo demonstrates minimal compromise, delivering highly detailed images that maintain nearly the same quality as its non-accelerated counterpart.

What is Adversarial Diffusion Distillation (ADD)?

The Generative Adversarial Network (GAN) concept is well known in AI as an approach to building fast deep learning neural networks: a trained GAN generator produces a sample in a single forward pass. In contrast, traditional diffusion models refine an image gradually over many denoising steps, which tends to be slower. ADD merges the advantages of both approaches.

According to the ADD research report, “The aim of this work is to combine the superior sample quality of DMs [diffusion models] with the inherent speed of GANs.”

The ADD method developed by Stability AI researchers aims to outpace other AI methods for image generation, and the researchers describe it as the first technique to achieve single-step, real-time image synthesis using foundation models. By combining adversarial training with score distillation, ADD leverages knowledge from a pretrained image diffusion model. The main benefits are fast sampling that retains high fidelity, along with the ability to refine results iteratively over additional steps.
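
Schematically, combining adversarial training with score distillation can be written as a weighted sum of two losses. The notation below is illustrative and does not come from this article: $\mathcal{L}_{\text{adv}}$ stands for the adversarial loss supplied by a discriminator, $\mathcal{L}_{\text{distill}}$ for the score distillation loss computed against the pretrained diffusion model acting as a teacher, and $\lambda$ for an assumed weighting between the two.

$$
\mathcal{L}_{\text{ADD}} = \mathcal{L}_{\text{adv}} + \lambda \, \mathcal{L}_{\text{distill}}
$$

Read this way, the adversarial term pushes single-step outputs to look realistic, while the distillation term keeps them consistent with what the slower pretrained model would produce.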

Experiments show that ADD significantly outperforms GANs, Latent Consistency Models, and other diffusion distillation methods when generating images in one to four steps.

While Stability AI does not yet consider the SDXL Turbo model ready for commercial use, it is currently available in preview on the company’s Clipdrop web service. Initial tests indicate rapid image generation, although the Clipdrop beta may lack some advanced options for differentiating image styles. The code and model weights are also accessible on Hugging Face under a non-commercial research license.
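
For readers who want to try the released weights, the following is a minimal sketch using the Hugging Face `diffusers` library. Several details are assumptions not stated in this article: the repository id `stabilityai/sdxl-turbo`, the half-precision `fp16` variant, a CUDA-capable GPU, and the setting `guidance_scale=0.0`. The helper names `turbo_settings` and `generate` are hypothetical and exist only for illustration.

```python
def turbo_settings(prompt: str) -> dict:
    """Keyword arguments for a single-step call (hypothetical helper)."""
    return {
        "prompt": prompt,
        "num_inference_steps": 1,  # one denoising step, as the article describes
        "guidance_scale": 0.0,     # assumption: classifier-free guidance disabled
        "height": 512,             # the article's benchmark resolution
        "width": 512,
    }


def generate(prompt: str, model_id: str = "stabilityai/sdxl-turbo"):
    """Load the pipeline and return one PIL image.

    Requires torch, diffusers, and a CUDA GPU; the model id is an assumption.
    """
    # Imported lazily so turbo_settings() works without the heavy dependencies.
    import torch
    from diffusers import AutoPipelineForText2Image

    pipe = AutoPipelineForText2Image.from_pretrained(
        model_id, torch_dtype=torch.float16, variant="fp16"
    )
    pipe.to("cuda")
    return pipe(**turbo_settings(prompt)).images[0]


# Example call (downloads the model weights on first use):
# generate("a cinematic photo of a red fox in the snow").save("fox.png")
```

Keeping the settings in a separate function makes the single-step configuration easy to inspect or adjust, for example by raising `num_inference_steps` to compare results across a few steps. Any use of the weights remains subject to the non-commercial research license mentioned above.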