Transform Reality into Fantasy: Live2Diff AI Instantly Stylizes Your Videos

Most people like

In today's digital landscape, businesses are increasingly turning to smart custom chatbots to enhance customer interactions and streamline operations. By leveraging advanced AI technology, these chatbots can provide personalized support, answer queries in real-time, and significantly improve user experiences. Whether you're looking to boost sales, improve customer service, or automate repetitive tasks, investing in custom chatbot development is a strategic move for any forward-thinking organization. Explore the transformative potential of chatbots and how they can drive growth and engagement for your brand.

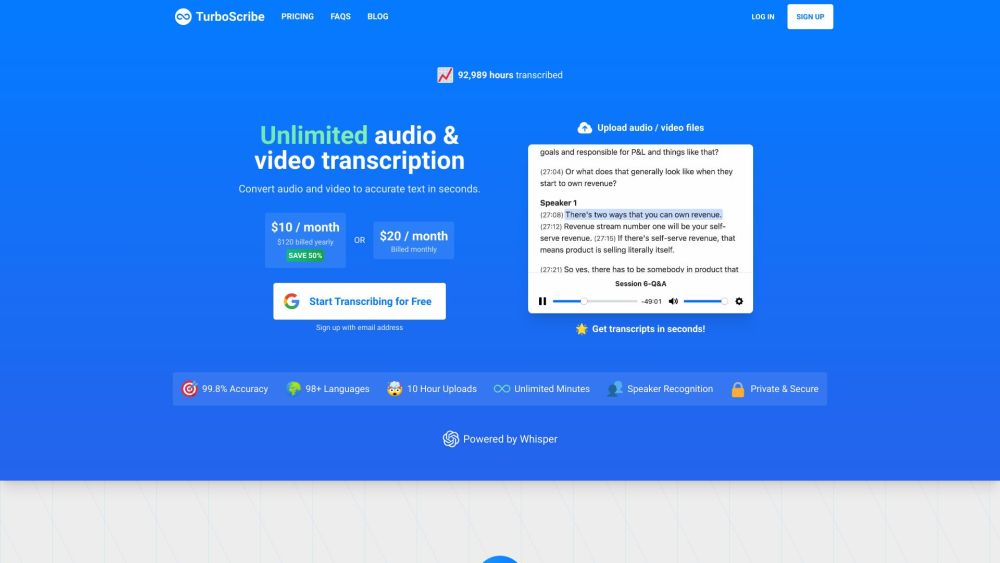

Experience unlimited AI transcription services delivering an impressive 99.8% accuracy across more than 98 languages. Unlock the power of seamless communication and transcription today!

Introducing Labnote, a groundbreaking platform that revolutionizes the research experience for scientists. By offering structured lab notes and leveraging advanced machine learning, Labnote enhances productivity and streamlines the entire research process.

In today’s fast-paced world, effective communication is essential, making spoken fluency a critical skill for learners. A language teaching machine designed specifically for improving spoken fluency can revolutionize the way individuals practice and refine their speaking abilities. By combining advanced technology with tailored learning techniques, this innovative tool helps users gain confidence and proficiency in their spoken language, making it an invaluable asset for both educators and learners alike. Discover how this cutting-edge machine can transform your language journey and elevate your conversational skills to new heights.
