Together AI has made waves in the AI community by giving developers free access to Meta’s cutting-edge Llama 3.2 Vision model through Hugging Face.

The Llama-3.2-11B-Vision-Instruct model enables users to upload images and interact with AI that analyzes and describes visual content.


This launch presents developers with an incredible opportunity to experiment with advanced multimodal AI technology without the high costs typically associated with large-scale models. Accessing the model is as simple as obtaining an API key from Together AI.

Meta’s ambitions for artificial intelligence are evident in its keen focus on models that can process both text and images, an approach known as multimodal AI.

With Llama 3.2, Meta pushes the limits of AI capabilities, while Together AI plays a pivotal role in democratizing access to these innovations through a user-friendly, free demo.

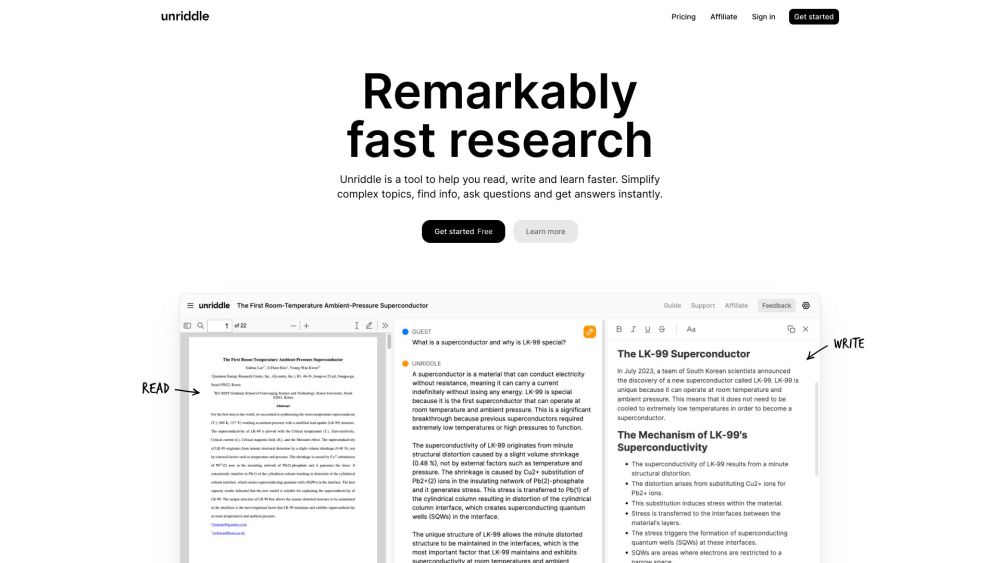

Together AI offers a streamlined interface to access the Llama 3.2 Vision model, highlighting the straightforward use of advanced AI with just an API key and adjustable parameters. (Credit: Hugging Face)

Unleashing Vision: Meta's Llama 3.2 Enhances AI Accessibility

Meta's Llama models have been at the forefront of open-source AI since the release of the first version in early 2023, challenging proprietary models like OpenAI’s GPT. The recent launch of Llama 3.2 at Meta’s Connect 2024 event introduces vision capabilities, enabling the model to interpret and analyze images alongside text.

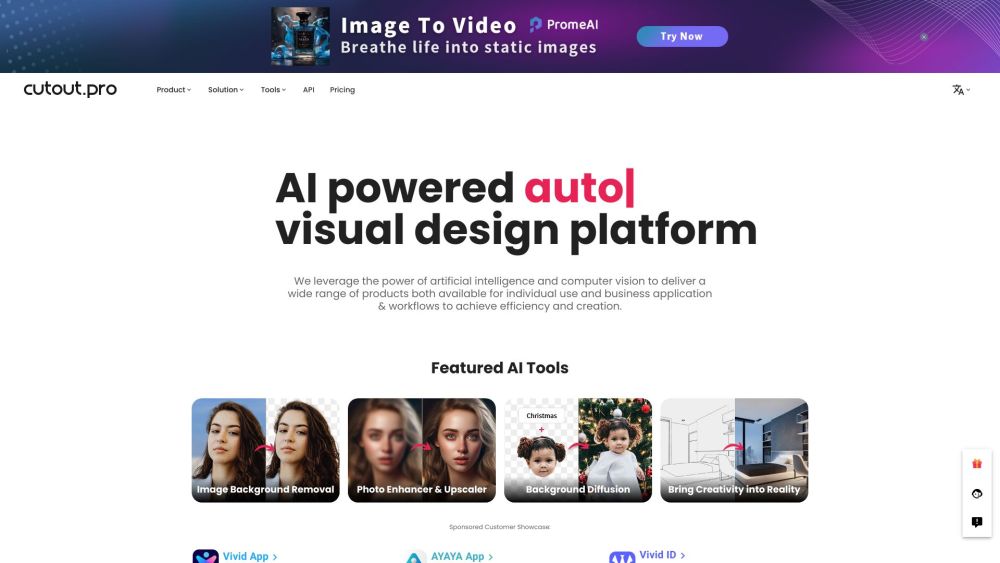

This advancement expands the potential application landscape, facilitating everything from sophisticated image-based search engines to AI-driven UI design assistants. The introduction of the free Llama 3.2 Vision demo on Hugging Face ensures these capabilities are more accessible than ever.

Developers, researchers, and startups can now explore the model’s multimodal features by simply uploading images and interacting with the AI in real time. Powered by Together AI's optimized API infrastructure, the demo emphasizes speed and cost efficiency.

From Code to Reality: Getting Started with Llama 3.2

Getting started with the model is straightforward: obtain a free API key from Together AI. Developers can register for an account on Together AI’s platform, which includes $5 in free credits. Once they have the key, they can enter it into the Hugging Face interface, upload images, and start interacting with the model.

The setup process takes just minutes, providing immediate insights into how AI generates human-like responses to visual inputs. For instance, users can upload screenshots of websites or product photos, prompting the model to produce detailed descriptions or answer questions about the images.
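For developers who would rather script this workflow than use the demo page, the sketch below shows one way the same image-plus-question request might look in Python. It is an illustration under stated assumptions, not Together AI's official client: the endpoint URL, the model identifier string, and the OpenAI-style message layout are assumptions not given in this article and should be checked against Together AI's API documentation. The helper names (`image_to_data_url`, `build_payload`, `describe_image`) are hypothetical.

```python
import base64
import json
import urllib.request

# Assumptions (not stated in the article): endpoint and model identifier.
# Verify both against Together AI's current documentation before use.
API_URL = "https://api.together.xyz/v1/chat/completions"
MODEL_ID = "meta-llama/Llama-3.2-11B-Vision-Instruct-Turbo"


def image_to_data_url(image_bytes: bytes, mime_type: str = "image/png") -> str:
    """Encode raw image bytes as a base64 data URL."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return f"data:{mime_type};base64,{encoded}"


def build_payload(question: str, image_url: str, max_tokens: int = 512) -> dict:
    """Build a chat request that pairs one question with one image.

    The message layout follows the OpenAI-style chat format, which is
    assumed here to be what the endpoint accepts.
    """
    return {
        "model": MODEL_ID,
        "max_tokens": max_tokens,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": question},
                    {"type": "image_url", "image_url": {"url": image_url}},
                ],
            }
        ],
    }


def describe_image(question: str, image_bytes: bytes, api_key: str) -> str:
    """Send the request and return the reply text (requires network access)."""
    payload = build_payload(question, image_to_data_url(image_bytes))
    request = urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=60) as response:
        body = json.load(response)
    # Assumed response shape: OpenAI-style "choices" list.
    return body["choices"][0]["message"]["content"]
```

To try it, read a screenshot or product photo from disk as bytes and call `describe_image("Describe this page layout.", image_bytes, api_key)`, with the API key loaded from an environment variable rather than hard-coded. Only `describe_image` touches the network; the other two helpers can be inspected offline.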

For enterprises, this opens up avenues for accelerated prototyping and development of multimodal applications. Retailers could leverage Llama 3.2 for visual search features, while media companies might utilize the model for automated image captioning.

The Bigger Picture: Meta’s Edge AI Vision

Llama 3.2 is part of Meta’s broader strategy toward edge AI, where smaller models can operate on mobile and edge devices without relying on cloud infrastructure. While the 11B Vision model is available for free testing, Meta has introduced lightweight versions with as few as 1 billion parameters for on-device use.

These smaller models can run on mobile processors from Qualcomm and MediaTek, making AI capabilities accessible to a wider array of devices. In an era where data privacy is critical, edge AI offers a more secure option because data is processed locally. That matters most in industries like healthcare and finance, where sensitive information must be protected.

Because Meta keeps the models open and modifiable, businesses can customize them for specific tasks without sacrificing performance.

Beyond the Cloud: Llama 3.2 and Edge AI Innovation

Meta's dedication to openness with the Llama models stands in contrast to the trend of proprietary AI systems. With Llama 3.2, Meta reinforces the belief that open models can accelerate innovation by enabling a larger community of developers to collaborate and experiment.

At the Connect 2024 event, Meta CEO Mark Zuckerberg said that Llama 3.2 represents a “10x growth” in model capabilities over the previous version, positioning it to lead the industry in both performance and accessibility.

Together AI's role in this ecosystem is equally significant. By providing free access to the Llama 3.2 Vision model, Together AI serves as a vital ally for developers and enterprises aiming to integrate AI into their products.

Together AI CEO Vipul Ved Prakash stated that their infrastructure is designed to support businesses of all sizes in deploying these models in production environments, whether on cloud or on-premises.

The Future of AI: Open Access and Its Implications

The Llama 3.2 Vision demo is currently available for free on Hugging Face, but Meta and Together AI have clear ambitions for enterprise adoption. The free tier is a starting point: developers aiming to scale their applications may need to move to paid plans as usage grows. For now, the free demo offers a low-risk way to try state-of-the-art multimodal AI.

As the AI landscape evolves, the distinction between open-source and proprietary models continues to blur. For businesses, the key takeaway is that open models like Llama 3.2 are prepared for real-world applications. With partners like Together AI easing access, entry barriers to cutting-edge AI have never been lower.

Want to try it yourself? Visit Together AI’s Hugging Face demo to upload your first image and discover what Llama 3.2 can do.