Alibaba Unveils Open Source Qwen 1.5-110B Model, Matching Performance of Meta's Llama 3-70B

Most people like

Unthread is a powerful automation tool designed to enhance customer support within Slack, offering a range of essential features for streamlined communication and efficiency.

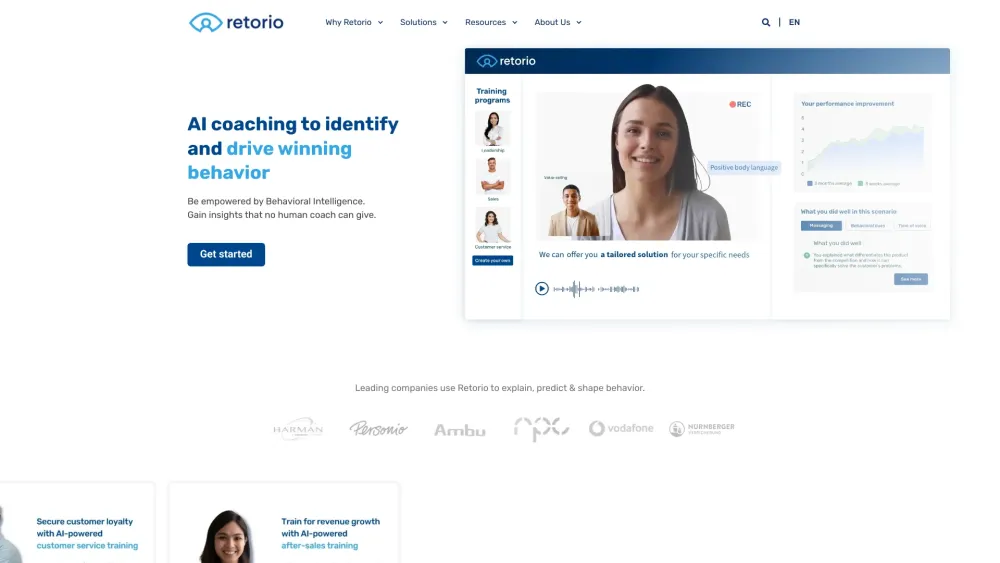

Unlocking the potential of a performance-driven culture hinges on effective Learning and Development (L&D) strategies powered by Behavioral Intelligence. By integrating insights from behavioral science, organizations can enhance employee engagement, streamline training programs, and foster a more dynamic workplace. This approach not only promotes continuous learning but also drives organizational success by aligning employee growth with business goals. Discover how to cultivate a thriving performance culture through innovative L&D practices backed by Behavioral Intelligence.

Introducing our AI-Powered Transcription and Translation Service: Revolutionizing the way you convert speech into text and translate languages effortlessly. Experience fast, accurate, and reliable results tailored to your needs, making communication seamless and efficient across various platforms. Unlock the potential of artificial intelligence to enhance your workflow and connect with a global audience like never before.

Discover the advantages of cloud providers offering GPU rentals, perfect for a wide range of computing tasks. Whether you need enhanced processing power for machine learning, video rendering, or complex simulations, GPU rentals present a flexible and cost-effective solution. Explore how these services can elevate your projects and drive innovation in today's data-driven landscape.

Find AI tools in YBX