Anthropic Launches Claude 3: AI Capabilities Approaching Human-Level Performance Amid Intensifying Competition

Discover the power of an AI-driven tool designed specifically for efficient document management and processing. This innovative solution streamlines your workflow, enhances organization, and improves accessibility, making it essential for businesses looking to optimize their document handling processes. Explore how this AI tool can transform the way you manage and process documents, boosting productivity and efficiency.

88stacks is a cutting-edge AI image generator designed for producing a wide array of stunning AI-generated images. Whether you're looking to create artwork, illustrations, or unique visuals, 88stacks harnesses advanced technology to transform your ideas into captivating images.

Transform your online presence with AI-powered website translation services, now available in 93 languages. Enhance user experience and reach a global audience effortlessly. Discover how our advanced translation technology can help you communicate effectively across linguistic barriers, ensuring that your website resonates with diverse cultures and markets.

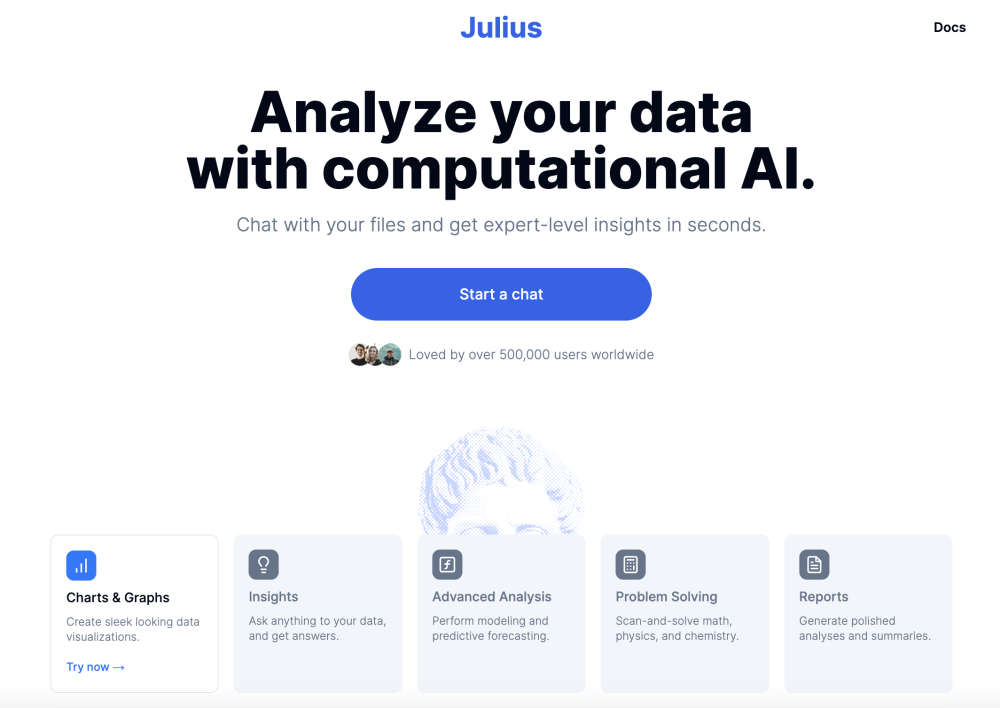

Unlock the power of AI-driven data analysis and visualization with our innovative AI data analyst toolkit. Designed to streamline your data exploration process, this tool transforms complex datasets into engaging visual insights, empowering you to make informed decisions. Discover how our AI data analyst can elevate your analytics game by enhancing your ability to interpret data and drive business success.
