California Governor Rejects AI Safety Bill SB 1047: Implications and Future Prospects

Most people like

Discover an all-in-one marketing platform designed to automate your tasks, streamline your workflows, and boost customer engagement effortlessly. Perfect for businesses seeking efficiency, this comprehensive solution simplifies your marketing efforts while maximizing your impact.

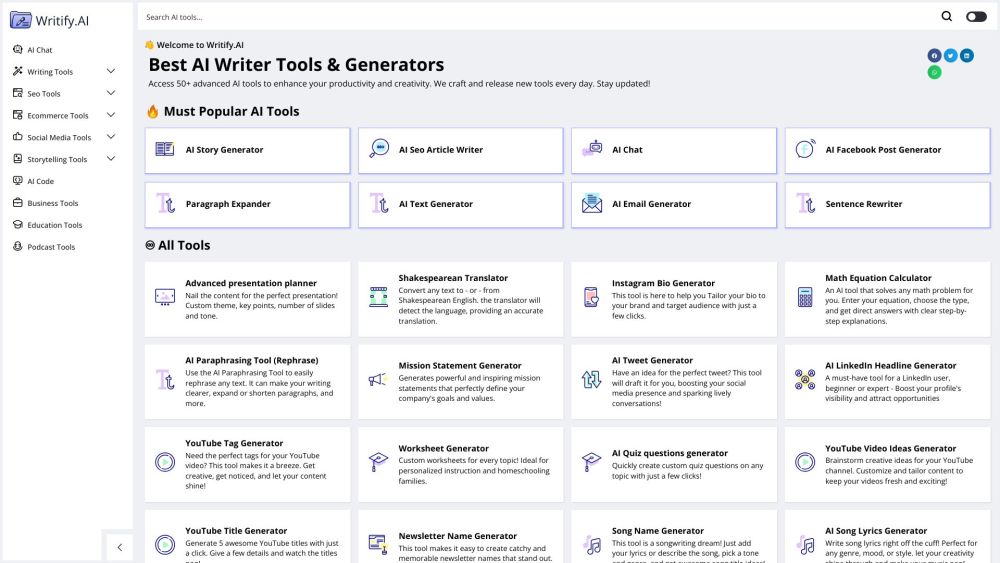

Unlock Your Potential with Our AI Tool Suite for Enhanced Productivity and Creativity

Discover how our powerful AI tool suite can transform your workflow, boost productivity, and ignite your creative spark. Tailored for individuals and teams alike, these innovative solutions streamline tasks, enhance collaboration, and inspire original ideas. Explore the future of work with cutting-edge AI technologies designed to maximize your efficiency and foster creativity in every project. Embrace a smarter way to achieve your goals today!

CodeStory is an innovative AI-driven editor designed to simplify coding tasks for developers. With its intelligent features, CodeStory enhances productivity and helps programmers code more efficiently.

Find AI tools in YBX