Google Unveils Innovative Techniques for Training Robots Using Video and Large Language Models

Most people like

Hubtype provides a cutting-edge conversational app platform that revolutionizes automated customer service. Experience seamless interaction and enhanced efficiency in customer support with our innovative solutions.

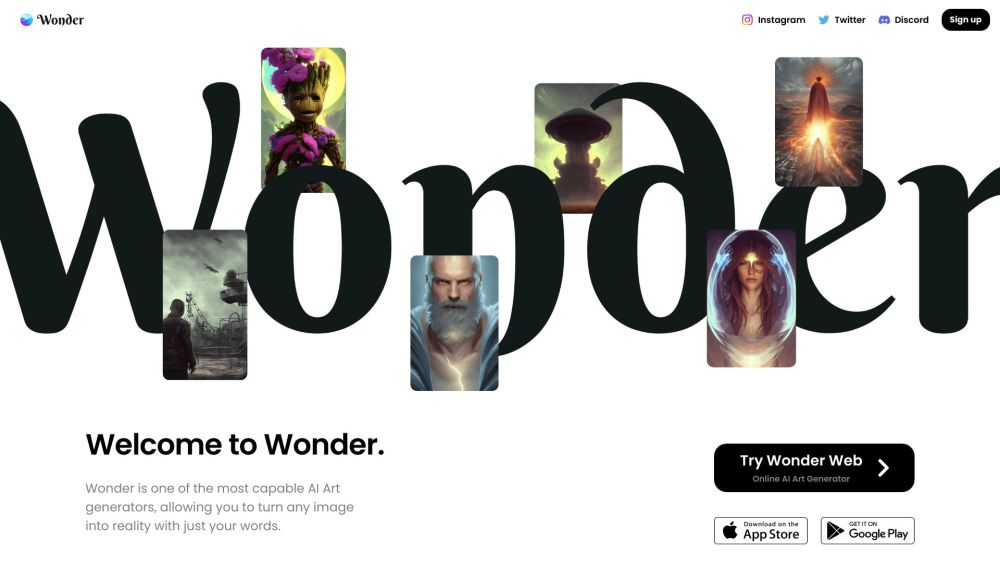

Unlock the power of creativity with our guide to transforming text into breathtaking digital art. Discover how to harness innovative tools and techniques that allow you to turn words into mesmerizing visuals. Whether you're an aspiring artist or a seasoned pro, learn how to elevate your artwork by creating stunning digital designs straight from your imagination.

Unlock instant learning with our AI-powered flashcards app. Experience a smarter way to study, enhance retention, and boost your knowledge efficiently. Transform your learning journey today!

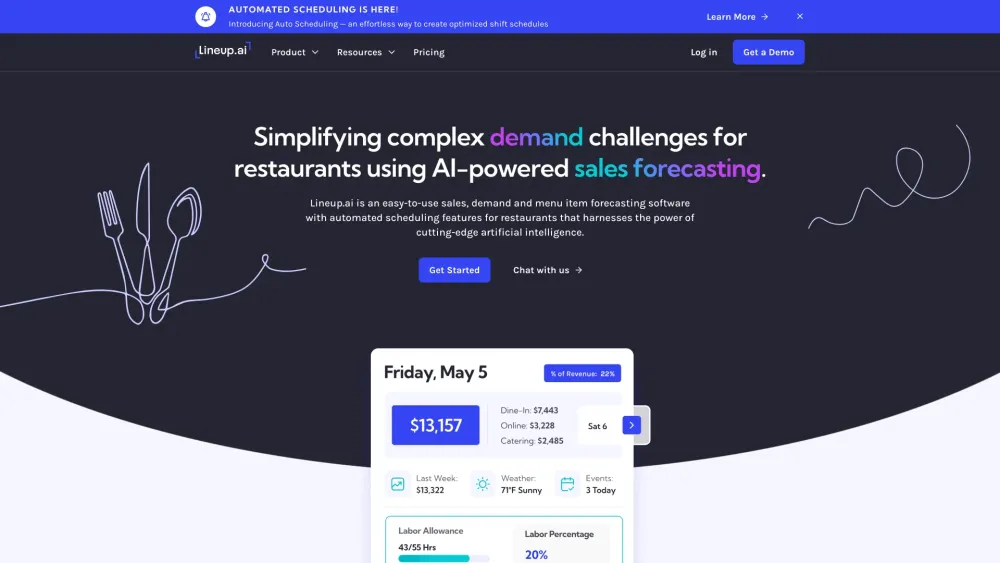

Enhance your restaurant's efficiency with cutting-edge AI forecasting software. Streamline operations, improve inventory management, and elevate customer satisfaction by harnessing the power of artificial intelligence. Discover how AI-driven insights can transform your dining establishment into a well-oiled machine, ready to adapt to market trends and boost profitability.

