OpenAI Unveils Sora Model: Create High-Quality One-Minute Videos from a Single Sentence

Most people like

Discover an AI-powered garden design app that generates thousands of innovative ideas for transforming your outdoor space. With advanced features and personalized recommendations, this app makes it easy to envision and create your dream garden. Unleash your creativity and explore endless possibilities for garden design today.

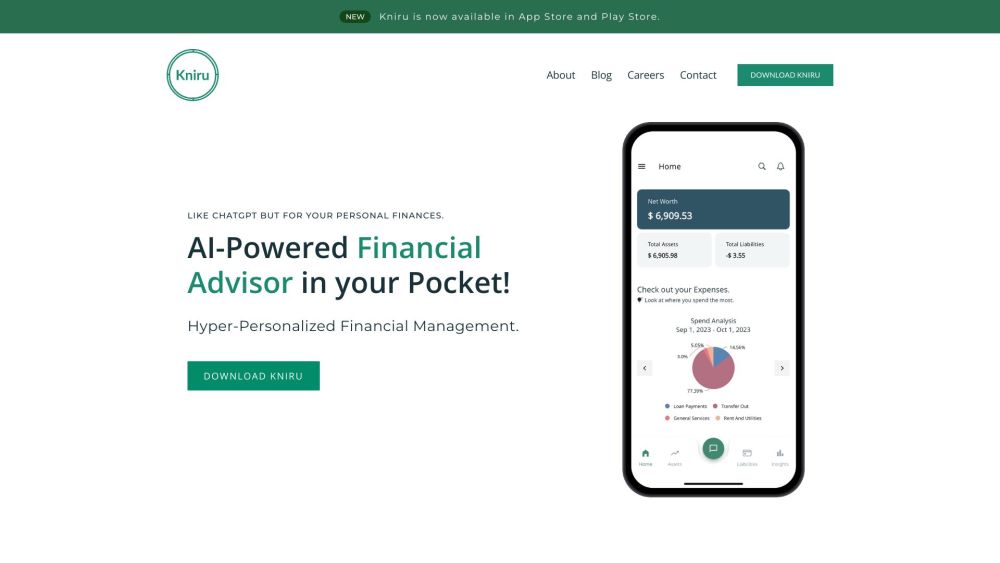

Introducing an AI-Powered Personal Finance Management App: Your Smart Solution for Managing Money.

Introducing the leading AI platform for creators, designed to empower innovators and enhance creative processes. With our powerful tools and resources, unleash your imagination and transform your ideas into reality. Join a community of forward-thinking creators who are revolutionizing their fields with advanced AI technology.

Find AI tools in YBX
