Google Creates External Council to Promote 'Responsible' AI Development

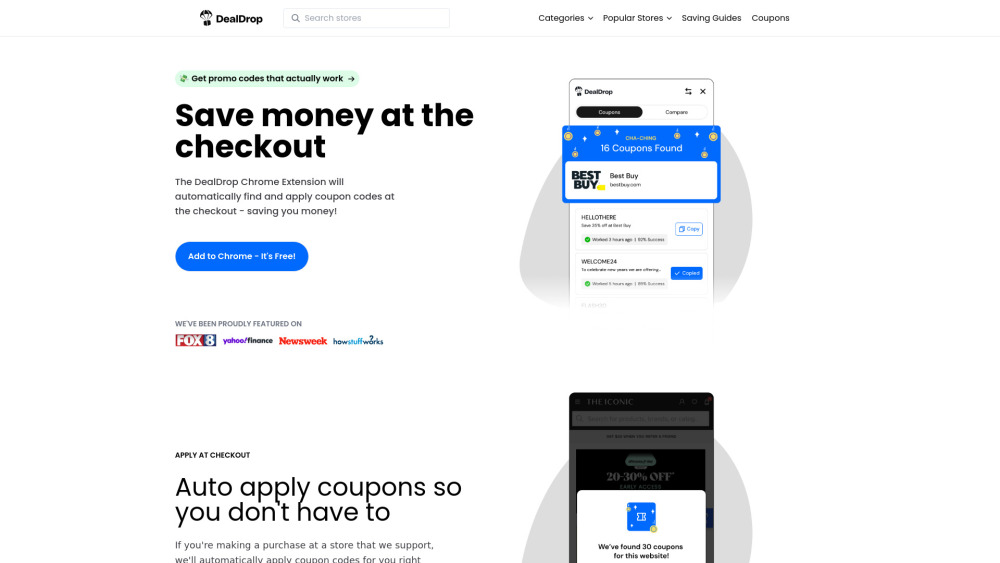
