Google Lens Introduces Video Search: Effortlessly Explore Visual Content with New Feature


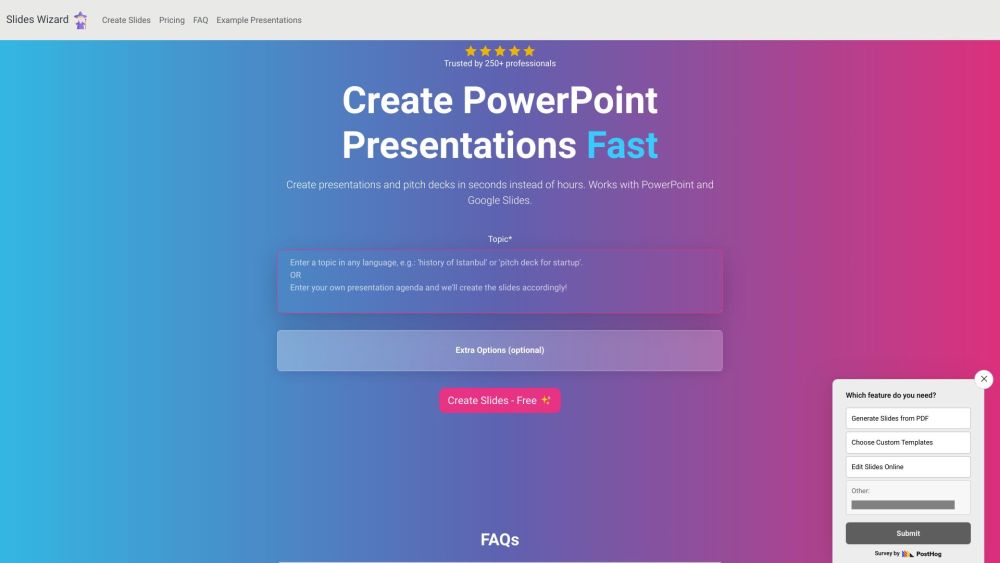

Create Stunning Presentations in Seconds

In today’s fast-paced world, the ability to build captivating presentations quickly is essential for professionals and students alike. With cutting-edge tools at your fingertips, you can craft eye-catching slides in seconds and spend your time on delivering your message. Whether you’re preparing for a business meeting, an academic lecture, or a creative pitch, our streamlined process helps you produce high-quality presentations with little effort. Say goodbye to hours of design work and hello to instant presentation perfection!

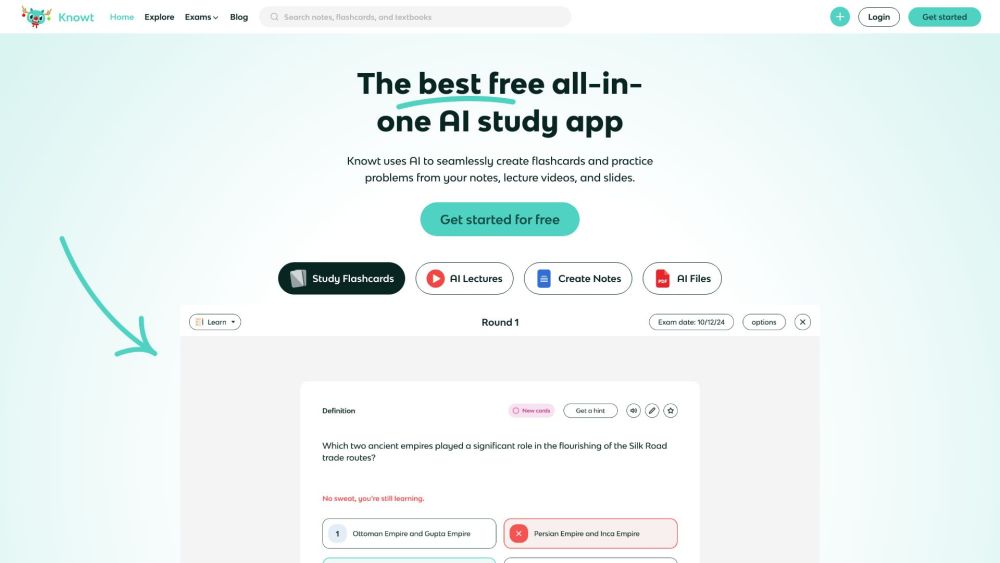

Harness the power of AI-driven study tools to improve your learning outcomes. These resources are designed to optimize your study sessions, improve retention, and raise your academic performance. Discover how AI can transform your approach to education and help you reach your goals more effectively.

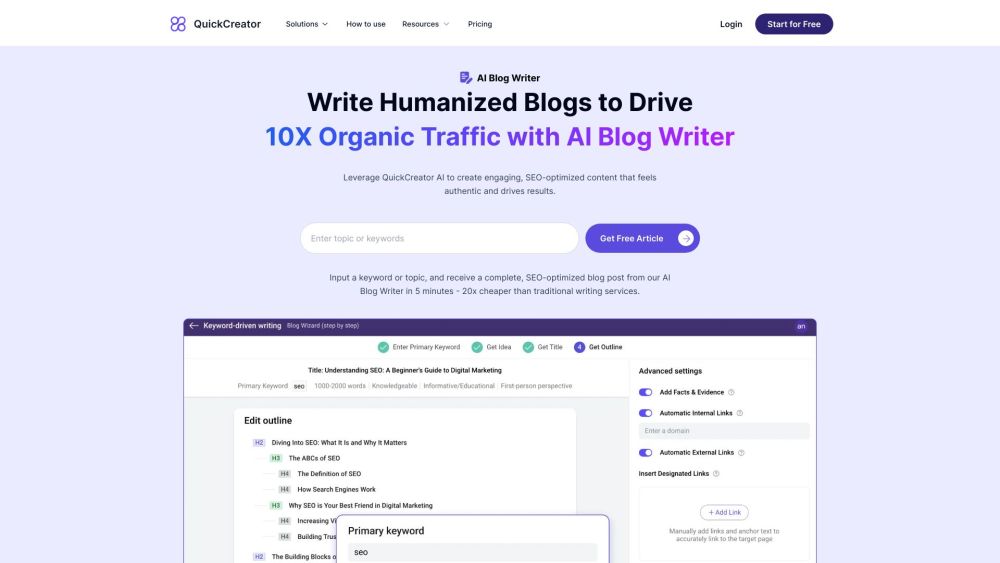

In today's competitive online environment, creating engaging and SEO-optimized blog content is essential for driving traffic and enhancing visibility. By harnessing the power of AI, you can effortlessly generate high-quality blog posts that not only resonate with your audience but also rank well on search engines. Discover how AI-driven tools can streamline your content creation process, allowing you to focus on strategies that fuel your online success.
