Is Apple's AI Revolution Around the Corner? Introducing the Ferret Multimodal Model for iPhone

Most people like

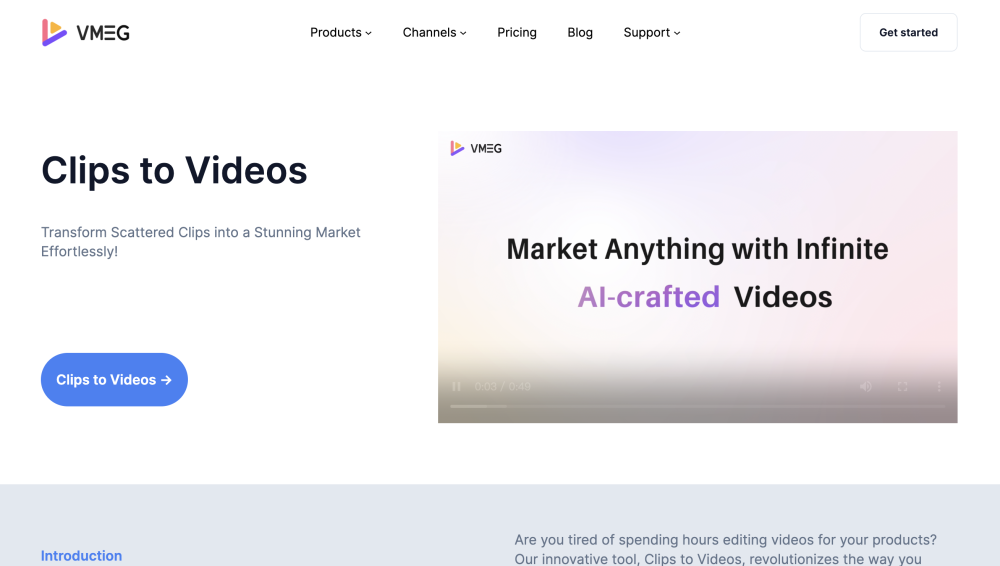

Unleash the power of artificial intelligence to turn your raw clips into stunning marketing videos that captivate your audience. Discover innovative tools and strategies to enhance your visual content, boost brand visibility, and drive engagement. Elevate your marketing efforts effortlessly with AI-driven video transformation!

Explore over 300 professional headshot styles and 100+ customizable backgrounds, with 21 million+ portraits created, trusted by more than 50,000 satisfied customers. Discover AI-generated business headshots, portraits, avatars, and profile pictures perfect for resumes, portfolios, and blogs—transform your personal brand today!

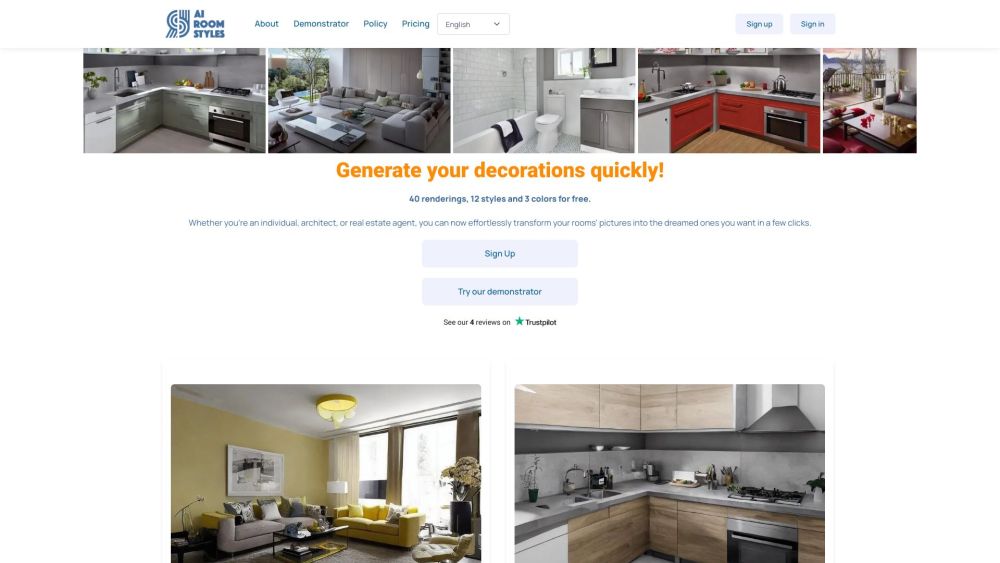

Elevate your room photos into stunning designs effortlessly with AI Room Styles. Transform your space with cutting-edge technology that brings your interior visions to life.

Unlock Your Potential with AI-Powered Interview Preparation and Feedback

Are you ready to ace your next job interview? Our AI-driven platform offers tailored interview preparation and insightful feedback to boost your confidence and enhance your performance. By leveraging cutting-edge technology, we help you refine your responses and develop key skills to stand out in competitive job markets. Whether you're a recent graduate or a seasoned professional, our tools equip you to succeed in any interview situation. Boost your chances of landing your dream job today!
