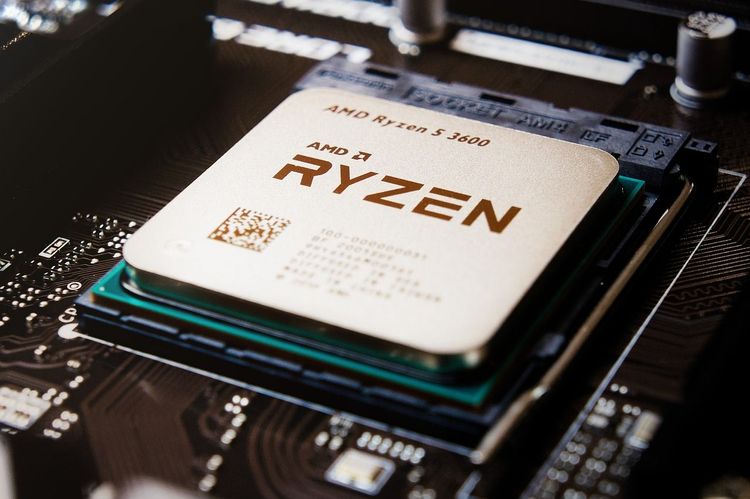

Many experts believe that OpenAI trains its large models on data from public video platforms, which has stirred controversy over how the prominent artificial intelligence (AI) company acquires its data. Similar concerns are emerging around several other major U.S. tech firms. Two questions follow: Are the data sources used to train AI models lawful? And where should the boundaries on using public data be drawn? Both matter to any country drafting future AI regulation.

A case in point is Sora, OpenAI's popular video generation tool, which depends on vast amounts of data that is widely assumed to come from YouTube. YouTube has consistently prohibited automated bulk downloading and commercial use of its content, and it throttles bandwidth to deter scraping. It remains unclear what technical methods OpenAI may have used to get around YouTube's defenses.

In a recent interview, OpenAI's Chief Technology Officer Mira Murati said the company uses "public and licensed data" to train Sora, but when asked whether that includes YouTube content, she said she was not sure. Xiang Ligang, director of the Zhongguancun Information Consumption Alliance, noted that AI models fall into two broad categories: general-purpose and industry-specific. OpenAI focuses mainly on general-purpose models, which are often trained on data scraped from public platforms such as YouTube. Copyright ownership tends to be more clearly defined for images and videos than for text, so disputes over them are sharper. For instance, a video or image belongs to the person who captured it; once it is absorbed into an AI model, however, it may effectively become the model's property, which raises significant legal questions.

As the global AI sector flourishes, many startups are striving to gather quality data for training their AI models. Insiders familiar with OpenAI's operations have reported the existence of a "secret team" tasked with gathering training data without looking closely into where it comes from. This points to an unspoken agreement among major tech players: as long as they can scrape data from others, they tacitly accept the same practice from competitors. Some observers, however, regard this consensus as a serious risk in the rapidly evolving AI landscape.

The rise of generative AI has set off a technological race and exposed how unclear and immature the current rules on the legality and ethics of data use are. Concern is growing over the potential harm posed by complex generative AI systems, while the public knows little about how data is sourced and used or how sensitive information is protected. Tech companies have said little, and even their own internal teams struggle to explain the underlying processes.

Recently, a number of leading U.S. companies have faced legal challenges over the sources of their AI training data. In California, three writers filed a class-action lawsuit against Nvidia, alleging that its NeMo AI platform was trained on content from websites hosting pirated literary works to build its natural language generation capabilities. In addition, 18 writers, including the author of "A Song of Ice and Fire," have sued OpenAI for copyright infringement, and The New York Times has taken legal action against OpenAI and Microsoft over alleged unauthorized use of its content. Many designers have also sued AI art generators such as Midjourney and Stability AI for using their copyrighted works without permission. The outcomes of these cases could significantly influence future regulation.

Amid increasing scrutiny, some tech giants have remained tight-lipped about their data sources. Last year, two U.S. lawmakers introduced the "Artificial Intelligence Model Transparency Act," which would require disclosure of the training data sources, acquisition methods, and algorithms used by AI models. It remains uncertain when the bill might advance toward becoming law.

Global regulatory frameworks are still in development. Chinese economist Pan Helin notes that countries differ widely in how they approach data acquisition for AI training, with some stressing transparency and others prioritizing data security. Either way, there is broad agreement that data selection should not compromise personal privacy: companies that scrape public data must process it to remove identifying information.

Last year, China implemented regulations governing the management of AI models, but further legal frameworks covering intellectual property will be needed as the technology evolves. Europe is moving quickly in this area, driven in part by growing awareness of the risks posed by cybercriminals who use AI for illegal purposes, including the creation of ransomware. The European Parliament recently passed the "AI Act," which sets stringent rules to ensure that the use of AI respects fundamental rights such as privacy and non-discrimination. Officials describe the legislation as the first comprehensive and binding regulation for trustworthy AI.

Pan Helin points out that, in contrast to the U.S. approach of relying heavily on publicly available data, mainstream AI models in China are typically trained on data that companies collect internally. In the U.S., data brokers often acquire data from platforms, but much of it is scraped from publicly accessible content, including social media, and then pre-labeled and processed. Companies such as Google and OpenAI maintain that their use of copyrighted material for AI training conforms to current norms, but those claims have yet to be tested by regulators or the courts.