Nvidia Competitors Shift Focus to Building Alternative Chips for AI Products

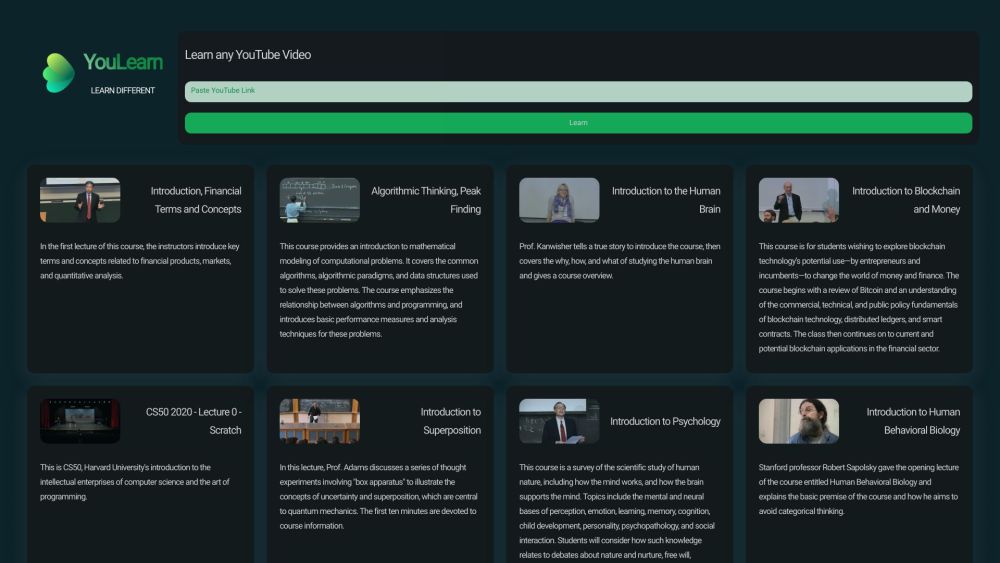

YouLearn is an AI tutor platform that helps personalize education with summaries and study resources.

We introduce the best solutions for AI interpretation, voice recording, virus protection, and creating New Year's cards. These tools are designed to enhance communication, protect data, and assist in preparing for special events in both daily life and business. Please take a look to find the best solution for your needs.

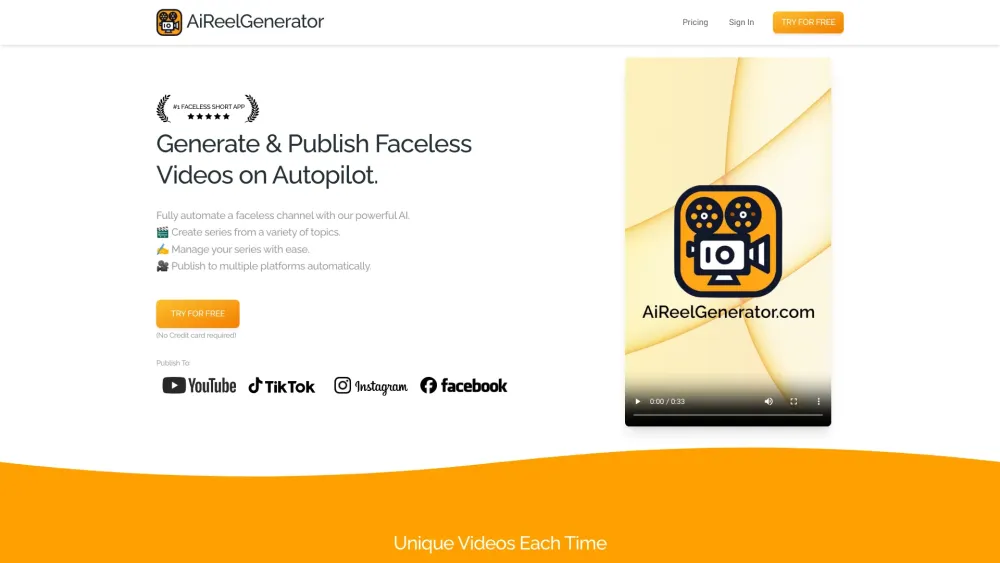

Discover how AI is revolutionizing content creation by generating faceless videos for various platforms. In this evolving digital landscape, these innovative tools provide creators with an exciting way to engage audiences while maintaining privacy and anonymity. Explore the potential of AI-driven faceless videos in enhancing your online presence across social media, marketing, and more.

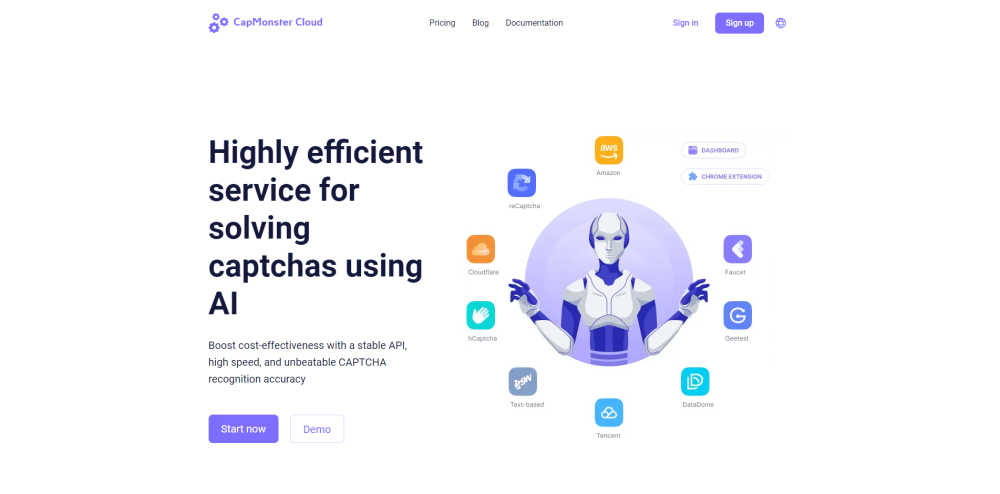

CapMonster Cloud: an advanced AI-powered CAPTCHA-solving service that streamlines the automation of solving a wide range of CAPTCHAs, including reCAPTCHA, hCaptcha, and more. With its innovative technology, CapMonster Cloud enhances efficiency and user experience in navigating online platforms.
