OpenAI Partners with Meta to Label AI-Generated Images for Transparency

Most people like

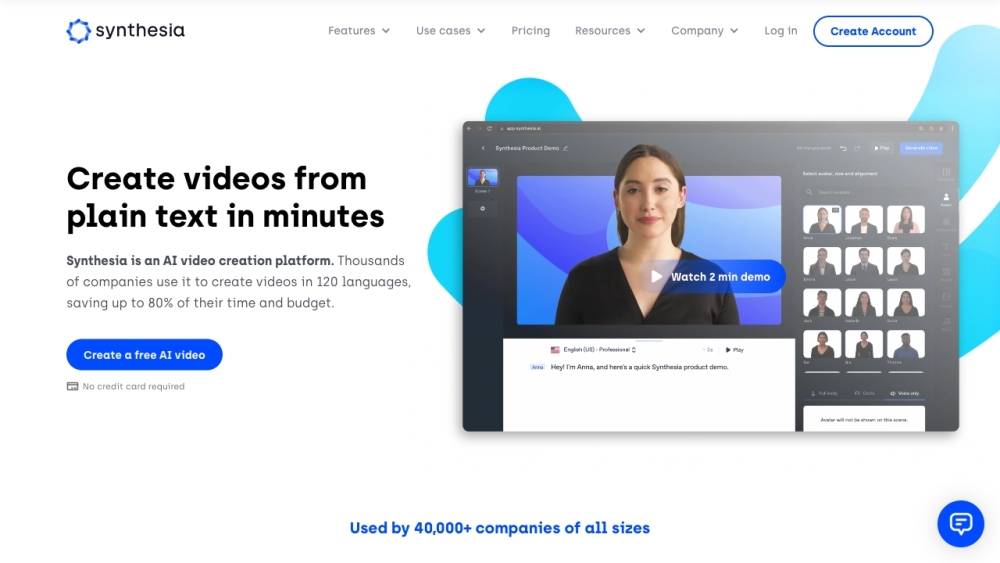
