IBM is advancing artificial intelligence (AI) security through its open-source initiative, the Adversarial Robustness Toolbox (ART).

Today, ART launches on Hugging Face, providing tools designed for AI users and data scientists to minimize potential security risks. Although this release marks a new milestone, ART has been in development since 2018 and was contributed to the Linux Foundation as an open-source project in 2020. Over the years, IBM has continued to evolve ART as part of the DARPA initiative called Guaranteeing AI Robustness Against Deception (GARD).

AI security is becoming more urgent as AI technologies spread. Common threats include training-data poisoning, in which an attacker injects malicious samples into the data a model learns from, and evasion, in which an attacker subtly manipulates inputs at inference time so the model makes the wrong prediction.
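To make "evasion" concrete, here is a minimal sketch of the Fast Gradient Sign Method (FGSM), a classic evasion attack, run against a toy logistic-regression model written in plain Python. It assumes a hand-picked two-class linear model (the weights, input and `eps` budget are illustrative, not from the article). ART ships its own implementations of attacks like this; this is not ART's code, only an illustration of the idea: nudge each input feature by a small amount in the direction that increases the model's loss, and the prediction can flip even though the input barely changes.

```python
import math


def linear_score(w, b, x):
    """Raw score w.x + b of a toy linear model (illustrative, not an ART API)."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def predict(w, b, x):
    """Predict class 1 if the score is positive, else class 0."""
    return 1 if linear_score(w, b, x) > 0 else 0


def sign(v):
    """Return +1, -1 or 0 depending on the sign of v."""
    return (v > 0) - (v < 0)


def fgsm_linear(w, b, x, y, eps):
    """FGSM against logistic regression with cross-entropy loss.

    For this model the loss gradient w.r.t. input feature i is (p - y) * w_i,
    where p is the predicted probability of class 1. Each feature moves by
    eps in the sign of that gradient, i.e. the direction that raises the loss.
    """
    p = 1.0 / (1.0 + math.exp(-linear_score(w, b, x)))
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * sign(gi) for xi, gi in zip(x, grad)]


# Hypothetical example: a correctly classified input flips after a small nudge.
w, b = [1.0, -2.0, 0.5], 0.0
x, y = [0.3, -0.1, 0.2], 1
x_adv = fgsm_linear(w, b, x, y, eps=0.2)
print(predict(w, b, x), predict(w, b, x_adv))  # prints "1 0"
```

No feature moves by more than `eps`, yet the model's decision changes. Against deep networks the same gradient-sign step is computed by backpropagation instead of the closed-form gradient used here.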

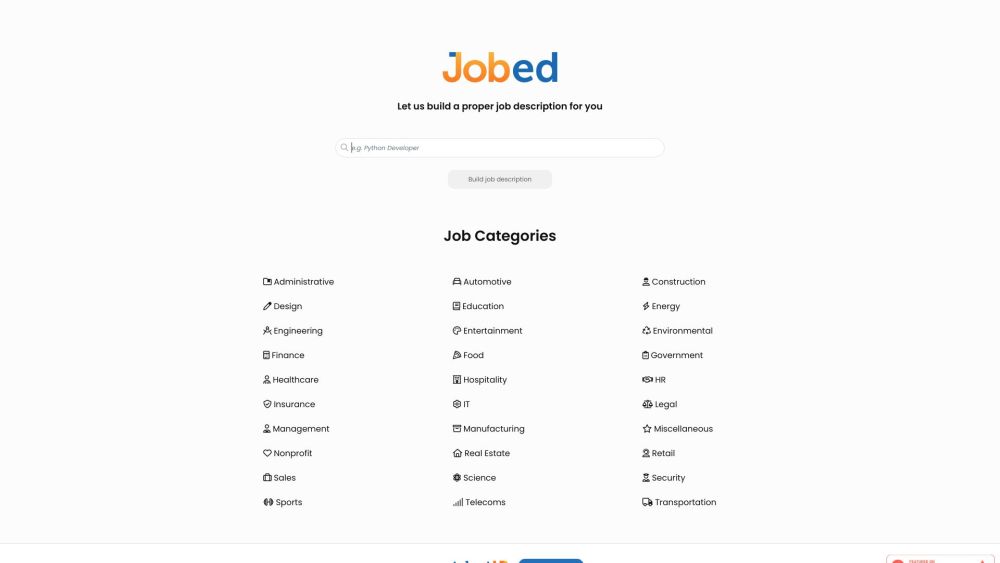

By integrating ART with Hugging Face, IBM aims to make defensive AI security tools more accessible to developers and help them mitigate threats more effectively. Organizations that use AI models from Hugging Face can now apply ART to defend their systems against evasion and poisoning attacks and build those defenses into their existing workflows.

“Hugging Face hosts a substantial collection of cutting-edge models,” said Nathalie Baracaldo Angel, IBM’s Manager of AI Security and Privacy Solutions. “This integration empowers the community to leverage the red-blue team tools within ART for Hugging Face models.”

The Journey of ART: From DARPA to Hugging Face

IBM's work on AI security predates the current generative AI boom, reflecting a proactive approach to safeguarding AI technologies. As an open-source project hosted by the Linux Foundation's LF AI & Data, ART benefits from contributions across many organizations. DARPA has also funded IBM to extend ART's capabilities under the GARD initiative.

ART remains a Linux Foundation project; what changes is that it now also supports Hugging Face models. Hugging Face has rapidly gained traction as a collaborative platform for sharing AI models, and IBM has established multiple partnerships with the organization, including a joint project with NASA focused on geospatial AI.

Understanding Adversarial Robustness and Its Importance in AI

Adversarial robustness is essential to AI security. Baracaldo Angel explains that the concept involves anticipating how adversaries might try to compromise machine learning systems and putting defenses in place before those attacks happen.

"The field requires an understanding of adversarial tactics to effectively protect the machine learning pipeline," she stated, emphasizing a red team approach to identify and mitigate relevant risks.

Since its inception in 2018, ART has evolved alongside the changing landscape of AI threats, adding attacks and defenses across a range of tasks and modalities, including object detection, object tracking and audio processing.

"Recently, we've focused on integrating multi-modal models like CLIP, which will soon be available in the system," she noted. "In the dynamic landscape of security, it's crucial to continually develop new tools in response to evolving threats and defenses."