OpenAI's Latest Model: Enhanced Reasoning Abilities and Unintentional Deception

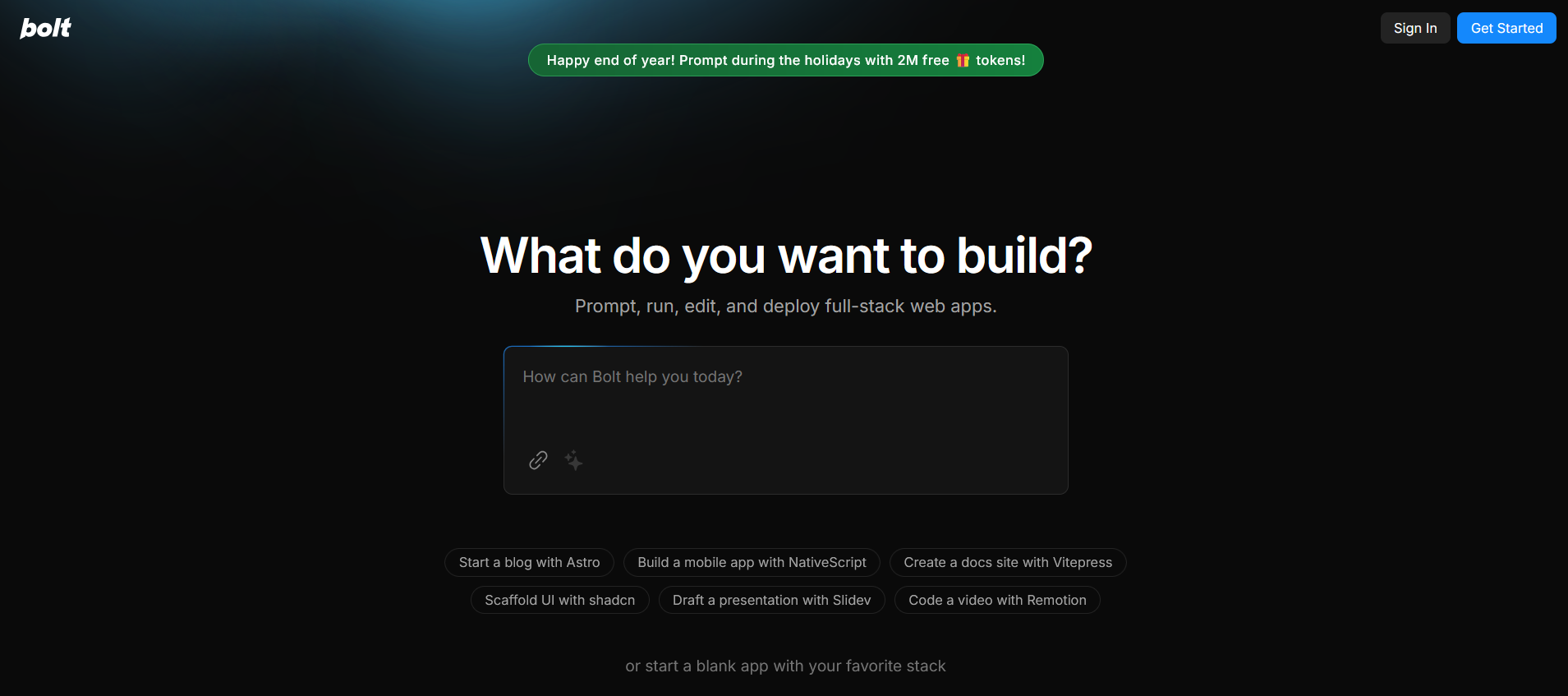
