US Lawyers Fined $5,000 for Using Fake Case Citations Generated by ChatGPT

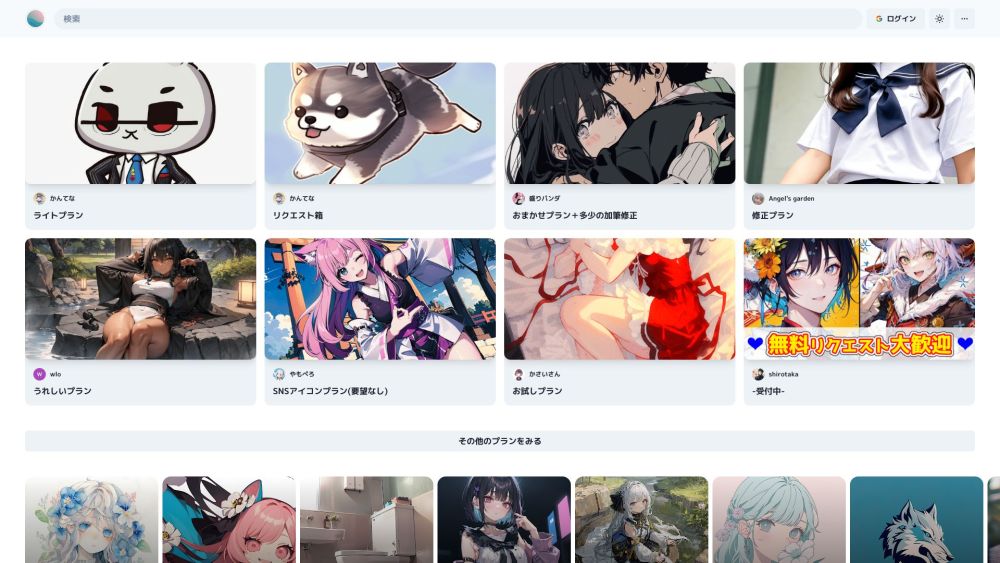

Discover Prompton, an innovative AI illustration platform that provides a diverse range of design services tailored to your creative needs.

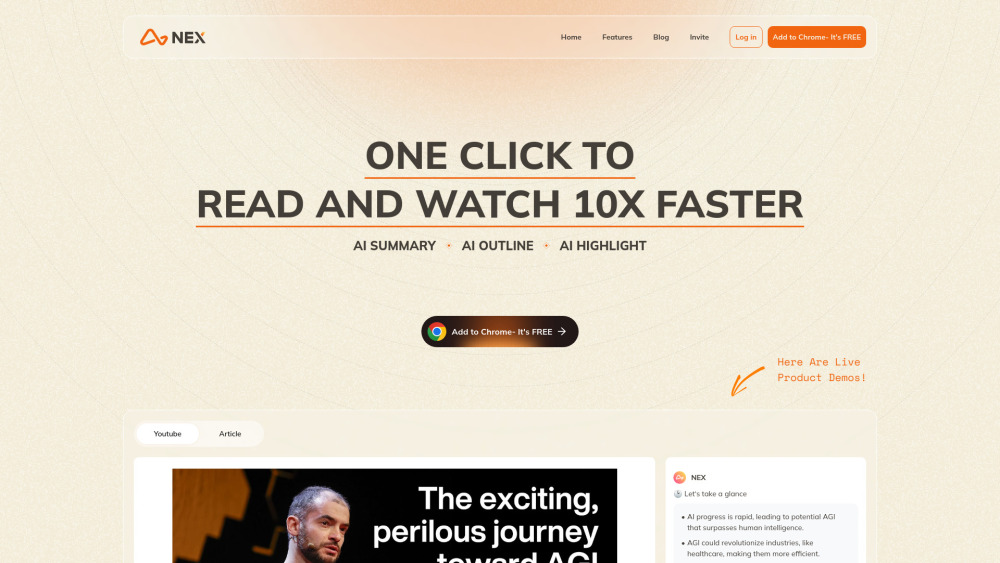

Introducing our AI-powered summary and outline generator—designed specifically for YouTube videos and articles! This innovative tool streamlines content creation by providing concise summaries and structured outlines, making it easier for creators and marketers to enhance their videos and articles. Elevate your content strategy, improve viewer engagement, and save time with our advanced features tailored for optimal SEO performance. Start crafting compelling summaries and outlines today!
