Introducing Huawei's First Comprehensive AI Training Toolchain: ModelEngine Officially Launched

Most people like

Discover an AI platform designed to enhance personalized language learning and interaction. Experience tailored lessons that adapt to your individual learning style, making language acquisition engaging and effective. Embrace the future of language education with cutting-edge technology that connects you with native speakers and immersive content. Start your journey to fluency today!

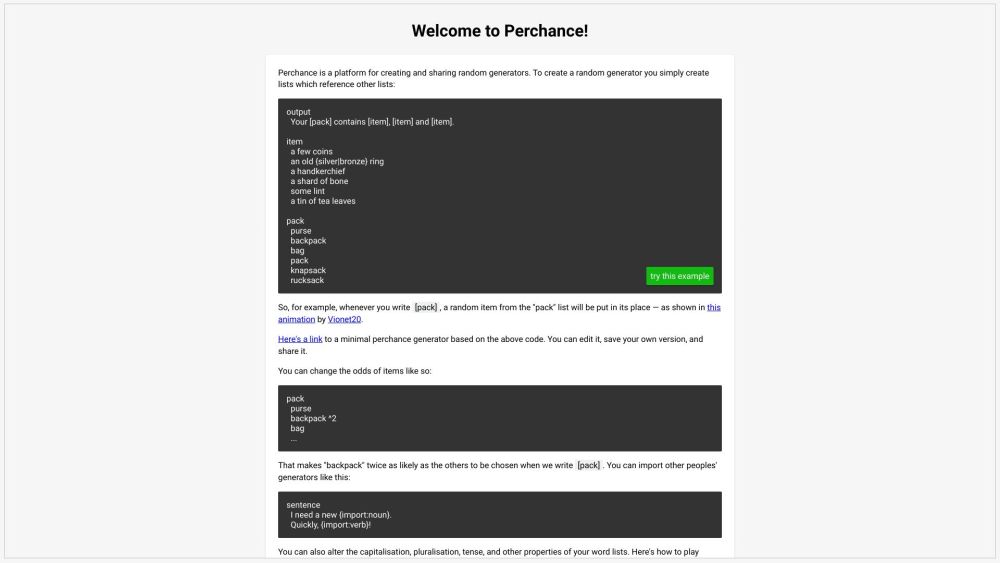

Discover an innovative platform designed to create random generators effortlessly. This versatile tool allows users to design custom generators for a wide range of applications, from games and decision-making to brainstorming and creative storytelling. Whether you’re a developer, educator, or a creative enthusiast, this platform empowers you to whip up original randomization solutions that engage and inspire. Unlock your creativity today with the ultimate randomness generator creation platform!

Introducing our revolutionary AI-powered platform designed to enhance class discussions. With cutting-edge technology, this tool fosters engaging conversations, promotes critical thinking, and streamlines participation for educators and students alike. Transform your learning environment today with our intuitive platform that revolutionizes the way discussions are facilitated in the classroom.
