Human vs. AI Game Test: Discover How 32% of Participants Struggle to Distinguish Between Humans and Robots


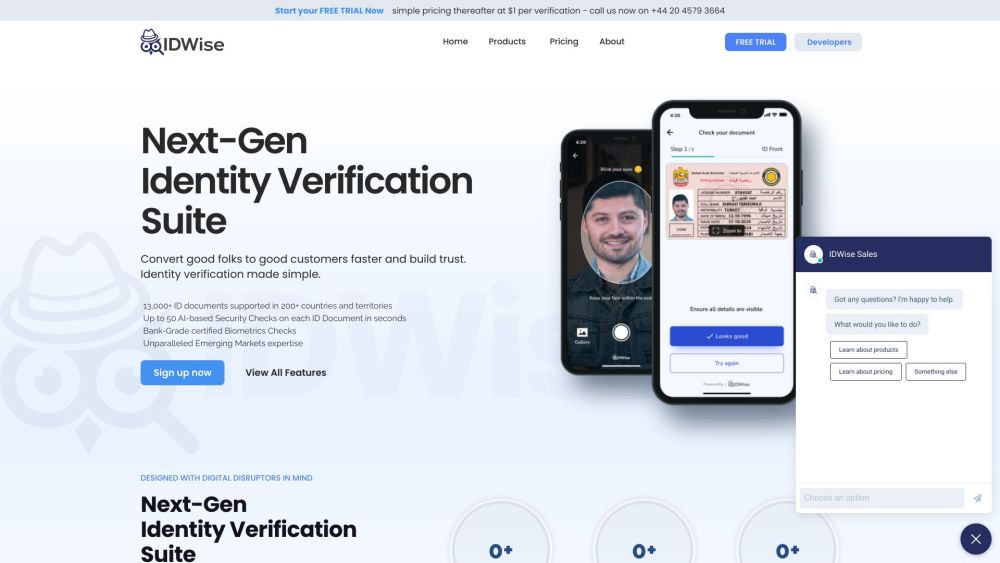

IDWise is an AI-powered identity verification solution that helps businesses authenticate customer identities seamlessly. By making verification efficient and reliable, IDWise strengthens security and builds trust.

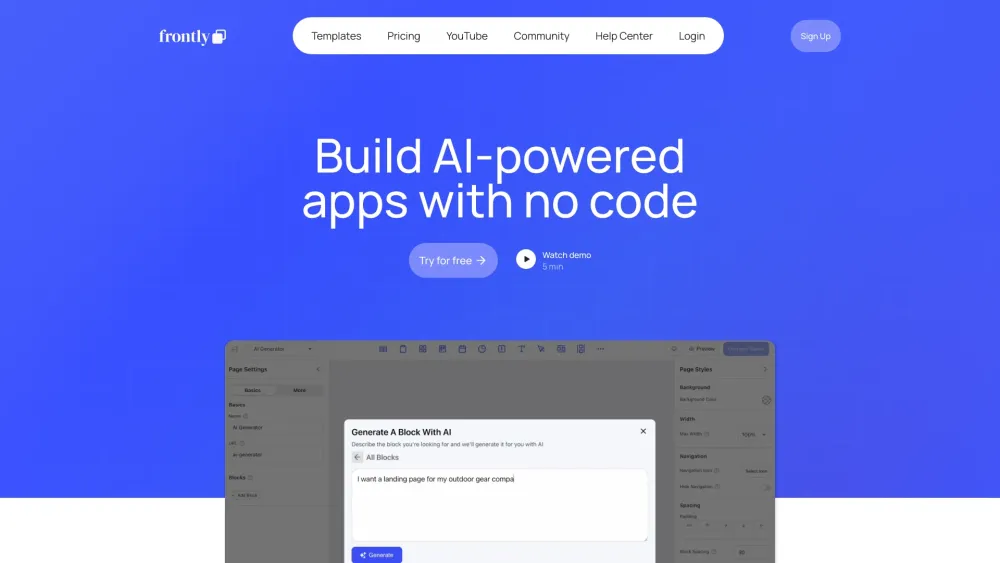

In today's fast-paced digital landscape, AI-powered no-code app development lets individuals and businesses create applications without extensive programming knowledge. Artificial intelligence streamlines the development process, so users can build, deploy, and customize apps quickly and efficiently. As organizations pursue agility and cost-effectiveness, no-code platforms with AI capabilities are becoming essential for staying competitive in an ever-evolving market. Discover how this technology can transform the way you create apps.

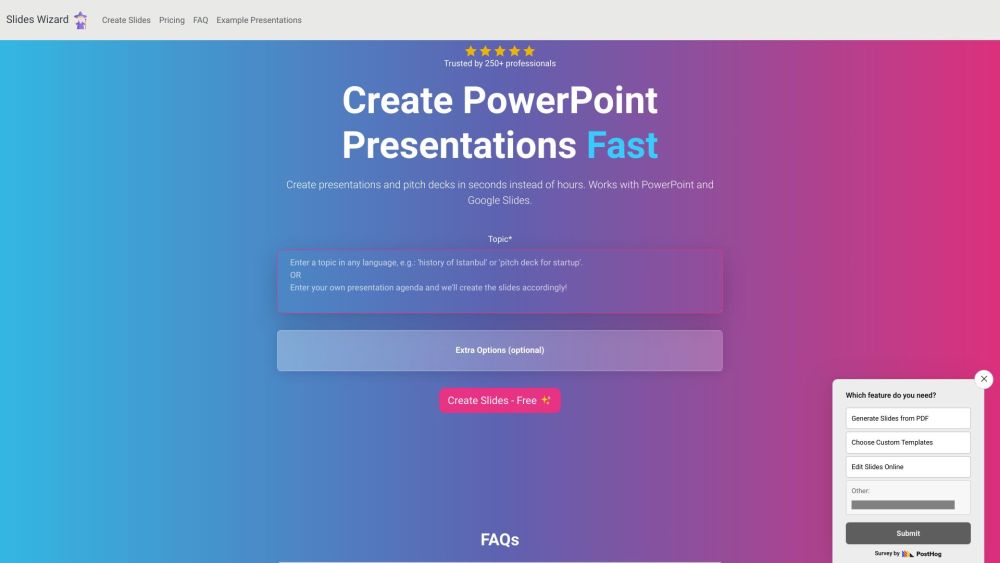

Create Stunning Presentations in Seconds

In today’s fast-paced world, the ability to create captivating presentations quickly is essential for professionals and students alike. With modern tools, you can produce eye-catching slides in seconds and focus on delivering your message effectively. Whether you’re preparing for a business meeting, an academic lecture, or a creative pitch, our streamlined process helps you produce high-quality presentations with little effort. Say goodbye to hours of design work and hello to instant, polished presentations!

Explore the captivating synergy between time-honored tarot traditions and cutting-edge technology. As the world evolves, so does the way we connect with ancient wisdom. Discover how modern innovations are revitalizing tarot readings, making them more accessible, and bringing new dimensions to this age-old practice. Embrace the fusion of the mystical and the digital, and unlock deeper insights and transformative experiences through tarot in the digital age.
