Sam Altman Resigns from OpenAI's Safety Committee: Key Insights and Implications

Most people like

AI Video Tools for Influencers: Elevate Your Content Creation

As an influencer, capturing your audience's attention is critical in today's fast-paced digital landscape. AI video tools empower you to enhance your content effortlessly, allowing for more dynamic storytelling and engaging visuals. With the right AI technology at your disposal, you can streamline the video production process and create high-quality, eye-catching videos that resonate with your followers. Discover how these innovative tools can transform your content strategy and boost engagement across platforms.

Introducing Validator AI, a powerful platform designed to assist entrepreneurs by offering immediate support and constructive feedback on their business ideas. With Validator AI, turning your concepts into thriving ventures has never been easier!

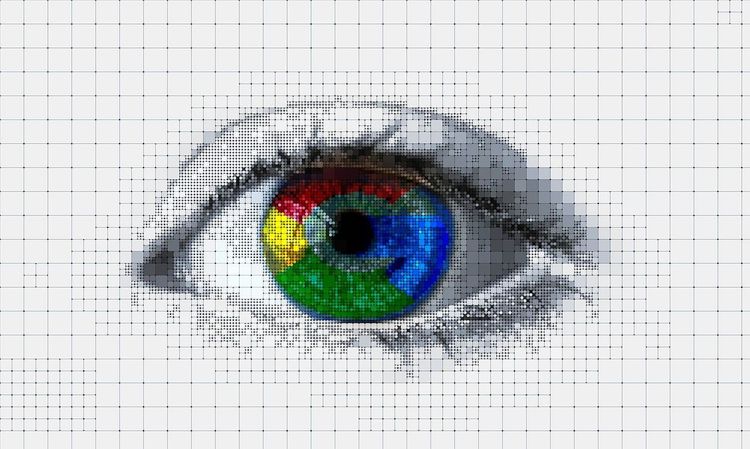

Discover the power of an AI image generator that seamlessly transforms your text into stunning, high-quality visuals. Experience the future of creativity as this innovative tool converts your words into captivating images, enhancing your projects and elevating your storytelling. Whether for marketing, social media, or personal expression, this AI-driven platform unlocks endless possibilities for visual content creation.

Are you pressed for time but need to deliver captivating presentations? With our innovative tools, you can craft stunning presentations in just minutes. Say goodbye to long hours of preparation and hello to dynamic, professional slides that will capture your audience's attention. Discover how easy it is to transform your ideas into visually appealing presentations without sacrificing quality.

Find AI tools in YBX