AI Technology Creates Fake Fingerprints to Bypass Biometric Scanners

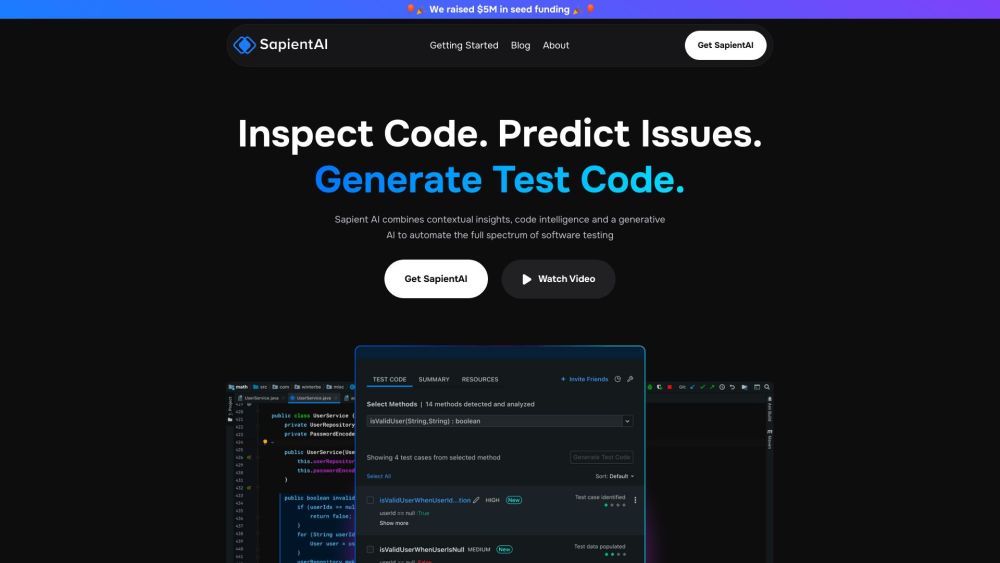
