A year ago, London-based Stability AI, renowned for its open-source image-generating model Stable Diffusion, quietly introduced Dance Diffusion, a model designed to generate music and sound effects from text descriptions. This marked Stability AI's entry into generative audio, highlighting the company's significant investment and keen interest in the evolving field of AI music creation tools. However, progress on the generative audio front seemed stagnant for nearly a year after the Dance Diffusion announcement, particularly concerning Stability's initiatives.

Harmonai, the research organization Stability funded to create Dance Diffusion, stopped updating the model sometime last year. Traditionally, Stability has provided resources and computational power to external teams rather than solely developing models in-house. Dance Diffusion never transitioned to a more user-friendly format; even now, using it requires working directly with the source code, because it has no user interface.

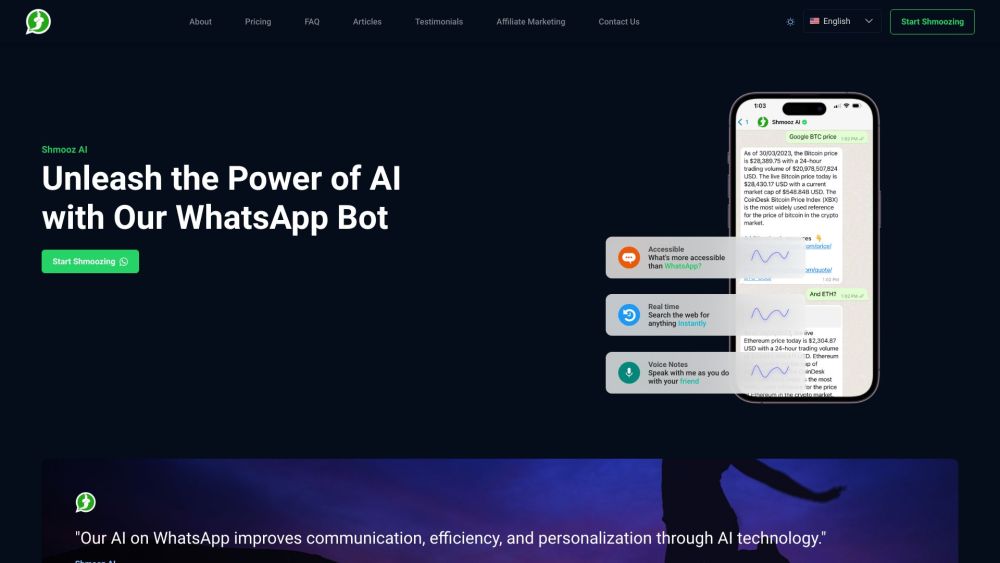

Under pressure from investors to convert over $100 million in funding into profitable products, Stability AI is re-engaging with audio in a substantial manner. Today, the company unveiled Stable Audio, which it claims is the first platform capable of generating “high-quality” 44.1 kHz music for commercial use through a technique known as latent diffusion. Stability asserts that Stable Audio’s model, which encompasses approximately 1.2 billion parameters, allows unprecedented control over synthesized audio content and duration compared to previous generative music tools.

“Stability AI is dedicated to unlocking human potential by developing foundational AI models across various content types or ‘modalities,’” stated Ed Newton-Rex, VP of audio for Stability AI, in an email interview. “Beginning with Stable Diffusion, we've expanded into languages, coding, and now music. We envision a future of generative AI that embraces multimodality.”

Whereas Dance Diffusion was trained primarily by Harmonai, Stable Audio was developed by Stability’s formalized audio team, which drew inspiration from Dance Diffusion. Harmonai now functions as Stability's AI music research division, according to Newton-Rex, who joined Stability last year after notable roles at TikTok and Snap.

“Dance Diffusion produced short, random audio clips from a restricted sound palette, requiring users to fine-tune the model for control. In contrast, Stable Audio can create longer audio tracks while allowing users to guide the generation through text prompts and specify desired durations,” Newton-Rex explained. “Some prompts yield impressive results, particularly in genres like EDM and ambient music, while others may produce more unconventional output, such as melodic, classical, and jazz compositions.”

Despite multiple requests for early access, Stability declined to allow previews of Stable Audio prior to its launch. Currently, users can access Stable Audio only through a web application that went live this morning. In a decision that may disappoint advocates of open research, Stability has not announced any plans to release the Stable Audio model as open source.

Nevertheless, Stability shared samples to demonstrate the model's capabilities across various genres, predominantly EDM, using brief prompts. Although the samples may have been cherry-picked, they sound notably more coherent and musical than much of the output from earlier audio generation models such as Meta’s AudioGen, Riffusion, and OpenAI’s Jukebox. They’re not flawless (some show a lack of creativity), but the ambient techno track below, for instance, sounds distinctly professional.

To achieve optimal results with Stable Audio, crafting a prompt that effectively conveys the nuances of the desired song — including genre, tempo, instruments, and emotional resonance — is crucial. For the techno track, Stability used the prompt: “Ambient Techno, meditation, Scandinavian Forest, 808 drum machine,” among others.

Another generated track was prompted with: “Disco, Driving, Drum Machine, Synthesizer, Bass, Piano.”

For comparison, I also tested the same prompt using MusicLM via Google’s AI Test Kitchen, which produced a response that, while decent, felt repetitive and less sophisticated.

One remarkable aspect of the songs Stable Audio generates is that they stay coherent for their full length, up to 90 seconds. Other AI models can create longer pieces, but their output frequently devolves into random noise after a few seconds. This coherence can be attributed to latent diffusion, a technique similar to the one Stable Diffusion uses for images. The model learns to gradually remove noise from a starting clip made almost entirely of noise, moving it step by step toward audio that matches the text prompt.
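The step-by-step denoising described above can be illustrated with a toy loop. This is a minimal sketch, not Stability's implementation: it uses a deterministic DDIM-style update, the "latent" is just four made-up numbers, and the function `oracle_noise_estimate` is a hypothetical stand-in for the trained network (it cheats by looking at the clean target, where a real model would predict the noise from the noisy input, the step index, and the text prompt). The autoencoder that maps audio to and from the latent space is omitted entirely.

```python
import math
import random


def alpha_bar(t, num_steps):
    """Share of clean signal left at step t: 1.0 at t=0, near 0 at t=num_steps."""
    return math.cos(0.5 * math.pi * t / (num_steps + 1)) ** 2


def oracle_noise_estimate(x_t, target, ab_t):
    """Hypothetical stand-in for the trained denoiser.

    It recovers the noise exactly because it is handed the clean target.
    A real model has to predict this from x_t, t, and the prompt.
    """
    return [(x - math.sqrt(ab_t) * z) / math.sqrt(1.0 - ab_t)
            for x, z in zip(x_t, target)]


def denoise(target, num_steps=50, seed=0):
    """Run a DDIM-style reverse process from pure noise toward `target`."""
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in target]  # start from pure noise
    errors = []
    for t in range(num_steps, 0, -1):
        errors.append(math.dist(x, target))
        ab_t = alpha_bar(t, num_steps)
        ab_prev = alpha_bar(t - 1, num_steps)
        eps = oracle_noise_estimate(x, target, ab_t)
        # Estimate the clean latent implied by the predicted noise.
        x0_hat = [(xi - math.sqrt(1.0 - ab_t) * e) / math.sqrt(ab_t)
                  for xi, e in zip(x, eps)]
        # Re-noise that estimate to the (lower) noise level of step t-1.
        x = [math.sqrt(ab_prev) * x0 + math.sqrt(1.0 - ab_prev) * e
             for x0, e in zip(x0_hat, eps)]
    errors.append(math.dist(x, target))
    return x, errors


if __name__ == "__main__":
    toy_latent = [0.5, -1.0, 0.25, 2.0]  # made-up values for illustration
    result, errors = denoise(toy_latent)
    print("distance from target at start:", errors[0])
    print("distance from target at end:  ", errors[-1])
```

Because the stand-in predictor is exact, the loop lands on the target; with a learned predictor each step is only approximate, which is why real samplers take many small steps.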

Stable Audio is capable of generating various audio outputs, not limited to songs — it can even mimic the sound of a passing car or a drum solo.

While Stability AI's approach to latent diffusion in music generation isn’t entirely unprecedented, it stands out for its musicality and sound fidelity. The model was trained with a vast dataset from the commercial music service AudioSparx, which provided a selection of approximately 800,000 tracks from independent artists. Stability took precautions to exclude vocal tracks to avoid potential ethical and copyright issues.

Interestingly, Stability does not filter prompts that might lead to legal complications. Unlike Google's MusicLM, which restricts prompts referencing specific artists, Stable Audio currently does not impose similar limitations, raising potential concerns about copyright infringement. Newton-Rex noted that while the tool is primarily focused on generating instrumental music, which limits risks around misinformation and deepfakes, they are actively addressing emerging challenges in AI and plan to implement content authenticity measures in their audio models.

Homemade tracks using generative AI to replicate familiar sounds have recently gained popularity, with a Discord community even releasing an entire album featuring an AI-generated imitation of Travis Scott's voice, which drew ire from his record label.

Music labels have been quick to act against AI-generated tracks on streaming platforms, citing intellectual property concerns. However, the legal status of "deepfake" music remains murky. Last month, a federal judge ruled that AI-generated art is not copyrightable, but the U.S. Copyright Office is still deliberating over the copyright implications of AI-generated content.

Stability AI contends that users of Stable Audio can monetize their creations, though they cannot necessarily copyright them. That is a narrower assurance than some other generative AI vendors offer. For instance, Microsoft recently announced plans to indemnify its commercial clients against copyright infringement lawsuits arising from AI-generated outputs.

Stable Audio users can choose a Pro subscription for $11.99 a month, allowing the generation of 500 commercial tracks lasting up to 90 seconds each. Those opting for a free tier can create 20 non-commercial tracks of 20 seconds in length monthly. Users wishing to utilize Stable Audio-generated music in applications or platforms with over 100,000 monthly active users must subscribe to an enterprise plan.
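For a rough sense of what the two tiers allow, the figures quoted above can be multiplied out. This is a back-of-the-envelope sketch that assumes the 500-track Pro allowance is monthly, like the free tier's, and that every track runs to its maximum length; the constant and function names are illustrative only.

```python
# Figures as quoted in the article.
PRO_PRICE_USD = 11.99
PRO_TRACKS = 500
PRO_MAX_SECONDS = 90
FREE_TRACKS = 20
FREE_MAX_SECONDS = 20


def max_minutes(tracks, seconds_per_track):
    """Upper bound on generated audio, in minutes, if every track is full length."""
    return tracks * seconds_per_track / 60


pro_minutes = max_minutes(PRO_TRACKS, PRO_MAX_SECONDS)     # 750.0 minutes (12.5 hours)
free_minutes = max_minutes(FREE_TRACKS, FREE_MAX_SECONDS)  # 400 seconds, under 7 minutes
pro_cost_per_track = PRO_PRICE_USD / PRO_TRACKS            # a little over 2 cents

if __name__ == "__main__":
    print(f"Pro:  up to {pro_minutes:.0f} min of audio, ${pro_cost_per_track:.4f} per track")
    print(f"Free: up to {free_minutes:.1f} min of audio")
```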

The terms of service for Stable Audio state that Stability reserves the right to use customers’ prompts, songs, and usage data for various purposes, including developing future models and services. Customers also agree to indemnify Stability in case of any intellectual property disputes arising from tracks created with Stable Audio.

One critical question remains: Will the creators of the audio used for training Stable Audio receive any compensation? Stability has faced scrutiny over training models on artists' works without proper consent or pay.

Similar to its recent image-generating models, Stable Audio offers an opt-out option for artists. AudioSparx EVP Lee Johnson stated that around 10% of artists chose to opt out of their works being included in the training dataset for Stable Audio's initial release.

“We respect our artists' decisions to engage or decline, and we aim to offer flexibility,” Johnson stated in an email.

Under its agreement with AudioSparx, Stability has established a revenue-sharing model that lets artists profit from their songs if they opted into the initial training or help train future versions of Stable Audio. This model resembles those being developed by Adobe and Shutterstock for their generative AI tools. However, Stability provided few details about the partnership, leaving artists uncertain about how much they might be paid for their contributions.

Artists may remain wary, given Stability CEO Emad Mostaque’s history of exaggerated claims and management controversies. Reports have surfaced highlighting financial struggles at Stability AI, including unpaid wages and the threat of losing cloud computing access due to ongoing cash burn.

Recently, Stability AI raised $25 million through a convertible note, bringing its total funding beyond $125 million, though it hasn't secured further investment at a higher valuation since its last $1 billion assessment. The company aims for a significantly higher valuation in the near future, despite challenges with revenue generation and financial sustainability.

Will Stable Audio be the turning point for Stability AI? It remains uncertain, and the obstacles the company faces suggest a challenging road ahead.